AWS EKS, or Amazon Elastic Kubernetes Service, is a managed service that simplifies the deployment, management, and scaling of Kubernetes clusters on the AWS cloud. EKS manages the Kubernetes control plane (setup, availability, and version updates), and EKS managed node groups automate the provisioning of worker nodes.

Kubernetes is an open-source platform designed to automate the deployment, scaling, and management of containerized applications. It orchestrates containers (for example, containers built from Docker images), allowing you to manage and coordinate applications across a cluster of machines.

Kubernetes is cloud-agnostic because it's designed to run applications consistently across various cloud environments. Its architecture abstracts away specific cloud provider details, enabling seamless deployment and management of applications on any cloud platform. This flexibility allows users to avoid vendor lock-in, facilitating the movement of workloads between different cloud providers or even on-premises infrastructure without significant modifications.

This tutorial series focuses on using Terraform/OpenTofu with AWS, emphasizing minimal resource usage and complexity while crafting basic cloud infrastructures through Infrastructure as Code (IaC).

Each component is broken down with explanations and diagrams. Readers will grasp the essentials for handling intricate AWS architectures, laying the foundation for advanced practices like modularization, autoscaling, and CI/CD.

How to Deploy Containers in AWS EKS with Terraform

Install Terraform or OpenTofu, have an AWS account, and install the AWS CLI

Terraform Plan to Create the VPC

Create the basic AWS Infrastructure needed for EKS: VPC and Subnets, Internet Gateway and Routing Table.

Terraform Plan to Create EKS Roles

The EKS Service and its nodes require authorization to manage AWS and EC2 resources. The Terraform plan creates the Roles and attaches Policies to them.

Terraform Plan to Create the EKS Cluster

The Amazon EKS cluster runs the key components of the control plane nodes.

Terraform Plan to Create the EKS Node Group

The EKS Node Group is a collection of Amazon EC2 instances that run the Kubernetes software required to host your Kubernetes applications.

Init, Plan, and Apply the Terraform Plan

Run the init, plan, and apply subcommands using terraform or tofu (it takes around 10 minutes).

Configure kubectl to Access the AWS EKS Cluster

Adding the EKS cluster to the kubeconfig file allows Kubernetes tools to access the cluster. AWS provides a command to update the kubeconfig.

Deploy a manifest with the Kubernetes command-line tool kubectl apply

Use the kubectl port-forward command for testing and an AWS Load Balancer for production.

Review your AWS account and associated services to avoid incurring costs after the tutorial. Use kubectl to list and delete resources, then run terraform destroy.

Error: waiting for EKS Node Group, Error: deleting EC2 VPC.

Explore Terraform modularization, AWS autoscaling, and CI/CD. Use Kubernetes locally for the development of containers.

You need:

To keep this tutorial simple, all the Terraform code is in a single file: no modules, no remote state storage, only two subnets, and limited resources. See other IT Wonder Lab tutorials to learn how to apply best practices for Terraform and AWS using modules and a remote backend.

Create the Terraform infrastructure definition file aws-eks-color-app.tf or download its contents from the GitHub repository for the Terraform Tutorial AWS EKS.

Defines the Terraform version and the required AWS provider, and sets the AWS region and the profile (IAM user and security credentials) to use. This tutorial pulls images from the public Docker Hub repository, which doesn't require any AWS-side configuration.
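The profile setting refers to a named section of the shared credentials file. Below is a minimal Python sketch of that lookup, using the standard configparser module on an INI-formatted credentials file. The file contents and key values are invented placeholders. The real Terraform AWS provider and AWS SDKs also check other sources (for example, environment variables), and this sketch ignores them.

```python
import configparser

# Hypothetical ~/.aws/credentials contents; the keys are fake placeholders.
SAMPLE_CREDENTIALS = """
[default]
aws_access_key_id = AKIAEXAMPLEDEFAULT
aws_secret_access_key = exampleDefaultSecret

[ditwl_infradmin]
aws_access_key_id = AKIAEXAMPLEINFRADMIN
aws_secret_access_key = exampleInfradminSecret
"""


def credentials_for_profile(ini_text: str, profile: str) -> dict:
    """Return the key pair stored under [profile] in a shared credentials file."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    if not parser.has_section(profile):
        raise KeyError(f"profile {profile!r} not found")
    section = parser[profile]
    return {
        "aws_access_key_id": section["aws_access_key_id"],
        "aws_secret_access_key": section["aws_secret_access_key"],
    }


# The provider block in this tutorial selects the "ditwl_infradmin" profile.
creds = credentials_for_profile(SAMPLE_CREDENTIALS, "ditwl_infradmin")
print(creds["aws_access_key_id"])
```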

# Copyright (C) 2018 - 2023 IT Wonder Lab (https://www.itwonderlab.com)
#
# This software may be modified and distributed under the terms
# of the MIT license. See the LICENSE file for details.

# -------------------------------- WARNING --------------------------------
# IT Wonder Lab's best practices for infrastructure include modularizing
# Terraform/OpenTofu configuration.
# In this example, we define everything in a single file.
# See other tutorials for best practices at itwonderlab.com
# -------------------------------- WARNING --------------------------------

#Define Terraform Providers and Backend
terraform {
  required_version = "> 1.5"
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

#-----------------------------------------
# Default provider: AWS
#-----------------------------------------
provider "aws" {
  shared_credentials_files = ["~/.aws/credentials"]
  profile                  = "ditwl_infradmin"
  region                   = "us-east-1" //See BUG https://github.com/hashicorp/terraform-provider-aws/issues/30488
}

Creates a VPC (Virtual Private Cloud) named ditlw-vpc and two public subnets named ditwl-sn-za-pro-pub-00 and ditwl-sn-zb-pro-pub-04.

AWS EKS requires subnets in at least two different Availability Zones. Private subnets are recommended for an EKS cluster, but this example uses public subnets for simplicity: they allow inbound Internet access to the cluster without a load balancer, and outbound access without a NAT gateway. Check AWS EKS Network requirements.

Names identify the AWS resources and serve as references for Terraform dependencies. For example, each subnet references the VPC ID using aws_vpc.ditlw-vpc.id. Names follow AWS Tagging and Naming Best Practices.

The subnets will be located in two different AWS availability zones in the US East (N. Virginia) region: us-east-1a and us-east-1b.
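The address plan for these subnets can be checked with a minimal Python sketch that uses the standard ipaddress module. It confirms that both subnets fit inside the VPC CIDR, that they don't overlap, and that they sit in at least two Availability Zones. The CIDRs and zones are copied from the Terraform code in this tutorial. The helper name check_layout is hypothetical. Keep in mind that AWS reserves the first four addresses and the last address of every subnet, so fewer addresses than num_addresses are usable.

```python
import ipaddress

# CIDR blocks and zones taken from this tutorial's Terraform configuration.
VPC_CIDR = ipaddress.ip_network("172.21.0.0/19")
SUBNETS = {
    "ditwl-sn-za-pro-pub-00": ("172.21.0.0/23", "us-east-1a"),
    "ditwl-sn-zb-pro-pub-04": ("172.21.4.0/23", "us-east-1b"),
}


def check_layout(vpc, subnets):
    """Validate that subnets fit the VPC, do not overlap, and span two or more AZs."""
    nets = {}
    for name, (cidr, zone) in subnets.items():
        net = ipaddress.ip_network(cidr)
        if not net.subnet_of(vpc):
            raise ValueError(f"{name} ({cidr}) is outside {vpc}")
        nets[name] = (net, zone)
    names = list(nets)
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if nets[a][0].overlaps(nets[b][0]):
                raise ValueError(f"{a} overlaps {b}")
    if len({zone for _, zone in nets.values()}) < 2:
        raise ValueError("EKS needs subnets in at least two Availability Zones")
    # First address, last address, and total size of each subnet.
    return {name: (str(net[0]), str(net[-1]), net.num_addresses)
            for name, (net, _) in nets.items()}


print("VPC range:", VPC_CIDR[0], "-", VPC_CIDR[-1])
for name, (first, last, size) in check_layout(VPC_CIDR, SUBNETS).items():
    print(name, first, last, size)
```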

The subnets are public, meaning that:

# VPC
resource "aws_vpc" "ditlw-vpc" {
  cidr_block           = "172.21.0.0/19" #172.21.0.0 - 172.21.31.255
  enable_dns_hostnames = true            #The VPC must have DNS hostname and DNS resolution support
  tags = {
    Name = "ditlw-vpc"
  }
}

# Subnet Zone: A, Env: PRO, Type: PUBLIC, Code: 00
resource "aws_subnet" "ditwl-sn-za-pro-pub-00" {
  vpc_id                  = aws_vpc.ditlw-vpc.id
  cidr_block              = "172.21.0.0/23" #172.21.0.0 - 172.21.1.255
  map_public_ip_on_launch = true            #Assign a public IP address
  availability_zone       = "us-east-1a"
  tags = {
    Name = "ditwl-sn-za-pro-pub-00"
  }
}

# Subnet Zone: B, Env: PRO, Type: PUBLIC, Code: 04
resource "aws_subnet" "ditwl-sn-zb-pro-pub-04" {
  vpc_id                  = aws_vpc.ditlw-vpc.id
  cidr_block              = "172.21.4.0/23" #172.21.4.0 - 172.21.5.255
  map_public_ip_on_launch = true            #Assign a public IP address
  availability_zone       = "us-east-1b"
  tags = {
    Name = "ditwl-sn-zb-pro-pub-04"
  }
}

Creates an Internet Gateway named ditwl-ig. It gives the EKS nodes the Internet access they need to download container images from the public Docker Hub repository. It also lets Internet users reach the public IP addresses assigned to the EKS nodes.

A route table named ditwl-rt-pub-main is created with a route that sends all traffic (0.0.0.0/0) to the Internet Gateway. Traffic within the VPC CIDR range "172.21.0.0/19" stays inside the VPC. This works because every VPC route table also contains an implicit local route for the VPC CIDR, and the most specific matching route takes precedence.

The new route table is set as the main (default) route table for the VPC. It therefore applies to every subnet in the VPC that is not explicitly associated with another route table. In a production VPC, the main route table should not provide Internet access, as a security best practice.
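A VPC route table always includes an implicit "local" route for the VPC CIDR. When several routes match a destination, the one with the longest prefix wins. Below is a minimal Python sketch of that longest-prefix-match rule. It assumes the table contains only the local route and the 0.0.0.0/0 route to the Internet Gateway, and it models nothing else about VPC routing. The target labels are illustrative strings, not AWS identifiers.

```python
import ipaddress

# Assumed contents of ditwl-rt-pub-main: the implicit local route plus the
# 0.0.0.0/0 route to the Internet Gateway defined in Terraform.
ROUTES = [
    (ipaddress.ip_network("172.21.0.0/19"), "local"),
    (ipaddress.ip_network("0.0.0.0/0"), "igw (ditwl-ig)"),
]


def select_target(destination: str, routes=ROUTES) -> str:
    """Pick the route whose prefix matches the destination most specifically."""
    addr = ipaddress.ip_address(destination)
    matching = [(net, target) for net, target in routes if addr in net]
    if not matching:
        raise LookupError(f"no route for {destination}")
    _, best_target = max(matching, key=lambda item: item[0].prefixlen)
    return best_target


print(select_target("172.21.4.10"))  # local
print(select_target("8.8.8.8"))      # igw (ditwl-ig)
```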

# Internet Gateway
resource "aws_internet_gateway" "ditwl-ig" {
  vpc_id = aws_vpc.ditlw-vpc.id
  tags = {
    Name = "ditwl-ig"
  }
}

# Routing table for public subnet (access to Internet)
resource "aws_route_table" "ditwl-rt-pub-main" {
  vpc_id = aws_vpc.ditlw-vpc.id
  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.ditwl-ig.id
  }
  tags = {
    Name = "ditwl-rt-pub-main"
  }
}

# Set new main_route_table as main
resource "aws_main_route_table_association" "ditwl-rta-default" {
  vpc_id         = aws_vpc.ditlw-vpc.id
  route_table_id = aws_route_table.ditwl-rt-pub-main.id
}

The EKS Service and its nodes require authorization to manage AWS and EC2 resources. Authorization is granted by creating Roles and attaching Managed Policies to those roles. The roles are then assigned to the EKS cluster and the EKS Node Group.

AWS Identity and Access Management (IAM) Roles are attached to AWS entities to define what they can do. A role acts as a set of permissions that allow or deny access to AWS resources.

Managed Policies in IAM are standalone, reusable sets of permissions. They can be either AWS managed (created and maintained by AWS) or customer managed (created and maintained by the user).

Roles define who or what can perform actions, and managed policies define which actions are allowed or denied.

EKS requires an IAM Role that allows managing AWS resources.

The ditwl-role-eks-01 role gives the EKS cluster access to the other AWS service resources it needs to operate clusters managed by EKS. The role's trust policy allows the EKS service (eks.amazonaws.com) to assume it. Access is granted by attaching the AWS-managed policy identified by the ARN arn:aws:iam::aws:policy/AmazonEKSClusterPolicy to the role.
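The data.aws_iam_policy_document block in the code below renders the role's trust (assume role) policy. The following Python sketch builds a JSON document with the same shape as the assume_role_policy shown in the tofu apply plan output. It is only an illustration: the JSON that Terraform actually renders may differ in formatting and field order. The function name assume_role_policy is a hypothetical helper.

```python
import json


def assume_role_policy(service_principal: str) -> str:
    """Build a trust policy that lets an AWS service assume a role via sts:AssumeRole."""
    document = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    return json.dumps(document, indent=2)


# eks.amazonaws.com for the cluster role; ec2.amazonaws.com for the node group role.
print(assume_role_policy("eks.amazonaws.com"))
```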

An AWS ARN (Amazon Resource Name) is a unique identifier for AWS resources such as EC2 instances, S3 buckets, IAM roles, and more. It's a structured string that includes information like the AWS service, region, account ID, resource type, and a unique identifier for the resource. ARNs are used for resource identification and access control across AWS services.
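ARNs follow the pattern arn:partition:service:region:account-id:resource. The partition is "aws" for standard AWS regions. The Python sketch below splits an ARN into those fields and applies it to two ARNs from this tutorial. The AWS-managed AmazonEKSClusterPolicy has no region and uses "aws" in place of an account ID. The resource part may itself contain colons, so the split is limited to five separators. parse_arn is a hypothetical helper, not an AWS SDK function.

```python
from typing import NamedTuple


class Arn(NamedTuple):
    partition: str
    service: str
    region: str
    account: str
    resource: str


def parse_arn(arn: str) -> Arn:
    """Split an ARN of the form arn:partition:service:region:account-id:resource."""
    parts = arn.split(":", 5)  # the resource part may contain ':' itself
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    return Arn(*parts[1:])


# AWS-managed IAM policy: empty region, "aws" instead of an account ID.
print(parse_arn("arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"))
# EKS cluster ARN, as it appears in the kubeconfig context later in this tutorial.
print(parse_arn("arn:aws:eks:us-east-1:123456789:cluster/ditwl-eks-01"))
```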

# EKS Cluster requires an IAM Role that allows managing AWS resources
data "aws_iam_policy_document" "ditwl-ipd-eks-01" {
  statement {
    effect = "Allow"
    principals {
      type        = "Service"
      identifiers = ["eks.amazonaws.com"]
    }
    actions = ["sts:AssumeRole"]
  }
}

# Role
resource "aws_iam_role" "ditwl-role-eks-01" {
  name               = "ditwl-role-eks-01"
  assume_role_policy = data.aws_iam_policy_document.ditwl-ipd-eks-01.json
}

# Attach Policy to Role
resource "aws_iam_role_policy_attachment" "ditwl-role-eks-01-policy-attachment-AmazonEKSClusterPolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
  role       = aws_iam_role.ditwl-role-eks-01.name
}

The EKS Node Group requires an IAM Role that allows managing EC2 resources.

The ditwl-role-ng-eks-01 role (created in IAM with the name eks-node-group-example) gives the EKS Node Group access to the EC2 resources required to launch the EC2 instances that join the EKS cluster as nodes.

Access is granted by attaching the following AWS-managed policies, identified by their ARNs (arn:aws:iam::aws:policy/...):

AmazonEKSWorkerNodePolicy: Allows Amazon EKS worker nodes to connect to Amazon EKS clusters.

AmazonEKS_CNI_Policy: Grants the permissions required to modify the IP address configuration of EKS worker nodes.

AmazonEC2ContainerRegistryReadOnly: Grants read-only access to Amazon Elastic Container Registry (Amazon ECR), the AWS container registry service (comparable to Docker Hub).

# EKS Node Group Assume Role, manage EC2 Instances
data "aws_iam_policy_document" "ditwl-ipd-ng-eks-01" {
  statement {
    effect = "Allow"
    principals {
      type        = "Service"
      identifiers = ["ec2.amazonaws.com"]
    }
    actions = ["sts:AssumeRole"]
  }
}

# IAM Role for EKS Node Group
resource "aws_iam_role" "ditwl-role-ng-eks-01" {
  name               = "eks-node-group-example"
  assume_role_policy = data.aws_iam_policy_document.ditwl-ipd-ng-eks-01.json
}

# Attach Policy to Role for EKS Node Group: AmazonEKSWorkerNodePolicy
resource "aws_iam_role_policy_attachment" "ditwl-role-eks-01-policy-attachment-AmazonEKSWorkerNodePolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"
  role       = aws_iam_role.ditwl-role-ng-eks-01.name
}

# Attach Policy to Role for EKS Node Group: AmazonEKS_CNI_Policy
resource "aws_iam_role_policy_attachment" "ditwl-role-eks-01-policy-attachment-AmazonEKS_CNI_Policy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"
  role       = aws_iam_role.ditwl-role-ng-eks-01.name
}

# Attach Policy to Role for EKS Node Group: AmazonEC2ContainerRegistryReadOnly
resource "aws_iam_role_policy_attachment" "ditwl-role-eks-01-policy-attachment-AmazonEC2ContainerRegistryReadOnly" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
  role       = aws_iam_role.ditwl-role-ng-eks-01.name
}

The Amazon EKS cluster runs the control plane nodes' key components:

The control plane runs in an account managed by AWS, and the Kubernetes API is exposed via the Amazon EKS endpoint associated with the cluster (See remote access to the Kubernetes API). Each Amazon EKS cluster control plane is single-tenant and runs on its own set of Amazon EC2 instances.

AWS runs the Amazon EKS endpoints (which give access to the control plane nodes) in different availability zones to enhance the service's availability and fault tolerance.

By deploying EKS endpoints across multiple availability zones within a region, AWS ensures that the Kubernetes control plane components, such as the API server and etcd, are distributed across these zones. This setup improves resilience against zone failures and enhances the overall stability of the EKS service.

The Terraform plan creates an EKS Cluster named ditwl-eks-01 in the two previously created subnets, ditwl-sn-za-pro-pub-00 and ditwl-sn-zb-pro-pub-04. It assigns the ditwl-role-eks-01 role to the cluster (by specifying the role's ARN) so the cluster can act with that role's permissions (see EKS IAM Role). It also defines an explicit Terraform dependency so that the ditwl-role-eks-01-policy-attachment-AmazonEKSClusterPolicy attachment is created before the EKS Cluster and deleted only after it.

In Terraform, the depends_on meta-argument creates explicit dependencies between resources. When a resource lists another resource in depends_on, Terraform creates the listed resource first. On destroy, it removes the dependent resource before the one it depends on. Most dependencies are inferred automatically from references (such as aws_subnet.ditwl-sn-za-pro-pub-00.id). depends_on is meant for hidden dependencies like this one, where the cluster does not reference the policy attachment but still needs the permissions it grants.
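Terraform turns references and depends_on entries into a dependency graph, creates resources in dependency order, and destroys them in reverse order. Below is a minimal Python sketch of that ordering, using the standard graphlib module (Python 3.9+) on a simplified subset of this tutorial's resources. Real Terraform also runs independent resources in parallel, which a single sequential order does not show.

```python
from graphlib import TopologicalSorter

VPC = "aws_vpc.ditlw-vpc"
SN_A = "aws_subnet.ditwl-sn-za-pro-pub-00"
SN_B = "aws_subnet.ditwl-sn-zb-pro-pub-04"
ROLE = "aws_iam_role.ditwl-role-eks-01"
ATTACH = ("aws_iam_role_policy_attachment."
          "ditwl-role-eks-01-policy-attachment-AmazonEKSClusterPolicy")
CLUSTER = "aws_eks_cluster.ditwl-eks-01"

# Each resource maps to the set of resources it depends on.
GRAPH = {
    VPC: set(),
    SN_A: {VPC},
    SN_B: {VPC},
    ROLE: set(),
    ATTACH: {ROLE},
    # Subnets and role come from references; ATTACH comes only from depends_on.
    CLUSTER: {SN_A, SN_B, ROLE, ATTACH},
}


def create_order(graph):
    """Return one valid creation order: every dependency before its dependents."""
    return list(TopologicalSorter(graph).static_order())


def destroy_order(graph):
    """Destroy in reverse creation order, so dependents go before dependencies."""
    return list(reversed(create_order(graph)))


created = create_order(GRAPH)
destroyed = destroy_order(GRAPH)
print(created.index(ATTACH) < created.index(CLUSTER))      # True
print(destroyed.index(CLUSTER) < destroyed.index(ATTACH))  # True
```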

# EKS Cluster
resource "aws_eks_cluster" "ditwl-eks-01" {
  name     = "ditwl-eks-01"
  role_arn = aws_iam_role.ditwl-role-eks-01.arn

  vpc_config {
    subnet_ids = [aws_subnet.ditwl-sn-za-pro-pub-00.id, aws_subnet.ditwl-sn-zb-pro-pub-04.id]
  }

  # Ensure that IAM Role permissions are created before and deleted after EKS Cluster handling.
  # Otherwise, EKS will not be able to properly delete EKS managed EC2 infrastructure such as Security Groups.
  depends_on = [
    aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKSClusterPolicy
  ]
}

The EKS Node Group is a collection of Amazon EC2 instances that run the Kubernetes software required to host your Kubernetes applications. These node groups are managed by EKS. Node groups consist of one or more EC2 instances that serve as worker nodes in the EKS cluster, allowing you to deploy and run your containerized applications across these nodes. Node groups can be added, removed, or updated to adjust the capacity or configuration of your EKS cluster.

The Terraform plan creates an EKS Node Group named ditwl-eks-ng-eks-01 in the two previously created subnets, ditwl-sn-za-pro-pub-00 and ditwl-sn-zb-pro-pub-04. It assigns the ditwl-role-ng-eks-01 role (by specifying its ARN) so the EKS nodes can assume it (see EKS Node Group IAM Role). It also sets the desired number of compute nodes to 1, the minimum to 1, and the maximum to 2.

The plan also defines a Terraform dependency so that the three policy attachments are created before the EKS Node Group and deleted after it. A lifecycle block with ignore_changes tells Terraform to ignore changes to desired_size. This lets the node count change outside Terraform (for example, through an autoscaler) without Terraform trying to revert it on the next plan.

In Terraform, the lifecycle block within a resource configuration allows you to define certain behaviors related to resource management. It includes parameters like create_before_destroy, prevent_destroy, and ignore_changes, among others.
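The effect of ignore_changes can be pictured as a plan diff that skips certain attribute paths. Below is a toy Python model of that idea. It is not Terraform's actual diff algorithm. The path tuple ("scaling_config", 0, "desired_size") is this sketch's own notation for scaling_config[0].desired_size, and plan_diff is a hypothetical helper.

```python
from copy import deepcopy


def plan_diff(desired, actual, ignore_paths=()):
    """Return {path: (actual, desired)} for attributes that differ, skipping ignored paths."""
    diffs = {}

    def walk(d, a, path):
        if path in ignore_paths:
            return
        if isinstance(d, dict) and isinstance(a, dict):
            for key in d.keys() | a.keys():
                walk(d.get(key), a.get(key), path + (key,))
        elif isinstance(d, list) and isinstance(a, list) and len(d) == len(a):
            for i, (di, ai) in enumerate(zip(d, a)):
                walk(di, ai, path + (i,))
        elif d != a:
            diffs[path] = (a, d)

    walk(desired, actual, ())
    return diffs


config = {"scaling_config": [{"desired_size": 1, "max_size": 2, "min_size": 1}]}
# Simulate an external change: the node group was scaled to 2 nodes outside Terraform.
remote = deepcopy(config)
remote["scaling_config"][0]["desired_size"] = 2

print(plan_diff(config, remote))  # {('scaling_config', 0, 'desired_size'): (2, 1)}
print(plan_diff(config, remote, {("scaling_config", 0, "desired_size")}))  # {}
```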

#EKS Node Group (Worker Nodes)
resource "aws_eks_node_group" "ditwl-eks-ng-eks-01" {
  cluster_name    = aws_eks_cluster.ditwl-eks-01.name
  node_group_name = "ditwl-eks-ng-eks-01"
  node_role_arn   = aws_iam_role.ditwl-role-ng-eks-01.arn
  subnet_ids      = [aws_subnet.ditwl-sn-za-pro-pub-00.id, aws_subnet.ditwl-sn-zb-pro-pub-04.id]

  scaling_config {
    desired_size = 1
    max_size     = 2
    min_size     = 1
  }

  # Ensure that IAM Role permissions are created before and deleted after EKS Node Group handling.
  # Otherwise, EKS will not be able to properly delete EC2 Instances and Elastic Network Interfaces.
  depends_on = [
    aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKSWorkerNodePolicy,
    aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKS_CNI_Policy,
    aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEC2ContainerRegistryReadOnly,
  ]

  # Allow external changes without Terraform plan difference
  lifecycle {
    ignore_changes = [scaling_config[0].desired_size]
  }
}

Run the init, plan, and apply subcommands using terraform or tofu (it takes around 10 minutes).

$ tofu init
...
$ tofu plan
...
$ tofu apply
data.aws_iam_policy_document.ditwl-ipd-eks-01: Reading...
data.aws_iam_policy_document.ditwl-ipd-ng-eks-01: Reading...
data.aws_iam_policy_document.ditwl-ipd-eks-01: Read complete after 0s [id=3552664922]
data.aws_iam_policy_document.ditwl-ipd-ng-eks-01: Read complete after 0s [id=2851119427]

OpenTofu used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

OpenTofu will perform the following actions:

  # aws_eks_cluster.ditwl-eks-01 will be created
  + resource "aws_eks_cluster" "ditwl-eks-01" {
      + arn = (known after apply)
      + certificate_authority = (known after apply)
      + cluster_id = (known after apply)
      + created_at = (known after apply)
      + endpoint = (known after apply)
      + id = (known after apply)
      + identity = (known after apply)
      + name = "ditwl-eks-01"
      + platform_version = (known after apply)
      + role_arn = (known after apply)
      + status = (known after apply)
      + tags_all = (known after apply)
      + version = (known after apply)
      + vpc_config {
          + cluster_security_group_id = (known after apply)
          + endpoint_private_access = false
          + endpoint_public_access = true
          + public_access_cidrs = (known after apply)
          + subnet_ids = (known after apply)
          + vpc_id = (known after apply)
        }
    }

  # aws_eks_node_group.ditwl-eks-ng-eks-01 will be created
  + resource "aws_eks_node_group" "ditwl-eks-ng-eks-01" {
      + ami_type = (known after apply)
      + arn = (known after apply)
      + capacity_type = (known after apply)
      + cluster_name = "ditwl-eks-01"
      + disk_size = (known after apply)
      + id = (known after apply)
      + instance_types = (known after apply)
      + node_group_name = "ditwl-eks-ng-eks-01"
      + node_group_name_prefix = (known after apply)
      + node_role_arn = (known after apply)
      + release_version = (known after apply)
      + resources = (known after apply)
      + status = (known after apply)
      + subnet_ids = (known after apply)
      + tags_all = (known after apply)
      + version = (known after apply)
      + scaling_config {
          + desired_size = 1
          + max_size = 2
          + min_size = 1
        }
    }

  # aws_iam_role.ditwl-role-eks-01 will be created
  + resource "aws_iam_role" "ditwl-role-eks-01" {
      + arn = (known after apply)
      + assume_role_policy = jsonencode(
            {
              + Statement = [
                  + {
                      + Action = "sts:AssumeRole"
                      + Effect = "Allow"
                      + Principal = {
                          + Service = "eks.amazonaws.com"
                        }
                    },
                ]
              + Version = "2012-10-17"
            }
        )
      + create_date = (known after apply)
      + force_detach_policies = false
      + id = (known after apply)
      + managed_policy_arns = (known after apply)
      + max_session_duration = 3600
      + name = "ditwl-role-eks-01"
      + name_prefix = (known after apply)
      + path = "/"
      + tags_all = (known after apply)
      + unique_id = (known after apply)
    }

  # aws_iam_role.ditwl-role-ng-eks-01 will be created
  + resource "aws_iam_role" "ditwl-role-ng-eks-01" {
      + arn = (known after apply)
      + assume_role_policy = jsonencode(
            {
              + Statement = [
                  + {
                      + Action = "sts:AssumeRole"
                      + Effect = "Allow"
                      + Principal = {
                          + Service = "ec2.amazonaws.com"
                        }
                    },
                ]
              + Version = "2012-10-17"
            }
        )
      + create_date = (known after apply)
      + force_detach_policies = false
      + id = (known after apply)
      + managed_policy_arns = (known after apply)
      + max_session_duration = 3600
      + name = "eks-node-group-example"
      + name_prefix = (known after apply)
      + path = "/"
      + tags_all = (known after apply)
      + unique_id = (known after apply)
    }

  # aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEC2ContainerRegistryReadOnly will be created
  + resource "aws_iam_role_policy_attachment" "ditwl-role-eks-01-policy-attachment-AmazonEC2ContainerRegistryReadOnly" {
      + id = (known after apply)
      + policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
      + role = "eks-node-group-example"
    }

  # aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKSClusterPolicy will be created
  + resource "aws_iam_role_policy_attachment" "ditwl-role-eks-01-policy-attachment-AmazonEKSClusterPolicy" {
      + id = (known after apply)
      + policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
      + role = "ditwl-role-eks-01"
    }

  # aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKSWorkerNodePolicy will be created
  + resource "aws_iam_role_policy_attachment" "ditwl-role-eks-01-policy-attachment-AmazonEKSWorkerNodePolicy" {
      + id = (known after apply)
      + policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"
      + role = "eks-node-group-example"
    }

  # aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKS_CNI_Policy will be created
  + resource "aws_iam_role_policy_attachment" "ditwl-role-eks-01-policy-attachment-AmazonEKS_CNI_Policy" {
      + id = (known after apply)
      + policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"
      + role = "eks-node-group-example"
    }

  # aws_internet_gateway.ditwl-ig will be created
  + resource "aws_internet_gateway" "ditwl-ig" {
      + arn = (known after apply)
      + id = (known after apply)
      + owner_id = (known after apply)
      + tags = {
          + "Name" = "ditwl-ig"
        }
      + tags_all = {
          + "Name" = "ditwl-ig"
        }
      + vpc_id = (known after apply)
    }

  # aws_main_route_table_association.ditwl-rta-default will be created
  + resource "aws_main_route_table_association" "ditwl-rta-default" {
      + id = (known after apply)
      + original_route_table_id = (known after apply)
      + route_table_id = (known after apply)
      + vpc_id = (known after apply)
    }

  # aws_route_table.ditwl-rt-pub-main will be created
  + resource "aws_route_table" "ditwl-rt-pub-main" {
      + arn = (known after apply)
      + id = (known after apply)
      + owner_id = (known after apply)
      + propagating_vgws = (known after apply)
      + route = [
          + {
              + carrier_gateway_id = ""
              + cidr_block = "0.0.0.0/0"
              + core_network_arn = ""
              + destination_prefix_list_id = ""
              + egress_only_gateway_id = ""
              + gateway_id = (known after apply)
              + ipv6_cidr_block = ""
              + local_gateway_id = ""
              + nat_gateway_id = ""
              + network_interface_id = ""
              + transit_gateway_id = ""
              + vpc_endpoint_id = ""
              + vpc_peering_connection_id = ""
            },
        ]
      + tags = {
          + "Name" = "ditwl-rt-pub-main"
        }
      + tags_all = {
          + "Name" = "ditwl-rt-pub-main"
        }
      + vpc_id = (known after apply)
    }

  # aws_subnet.ditwl-sn-za-pro-pub-00 will be created
  + resource "aws_subnet" "ditwl-sn-za-pro-pub-00" {
      + arn = (known after apply)
      + assign_ipv6_address_on_creation = false
      + availability_zone = "us-east-1a"
      + availability_zone_id = (known after apply)
      + cidr_block = "172.21.0.0/23"
      + enable_dns64 = false
      + enable_resource_name_dns_a_record_on_launch = false
      + enable_resource_name_dns_aaaa_record_on_launch = false
      + id = (known after apply)
      + ipv6_cidr_block_association_id = (known after apply)
      + ipv6_native = false
      + map_public_ip_on_launch = true
      + owner_id = (known after apply)
      + private_dns_hostname_type_on_launch = (known after apply)
      + tags = {
          + "Name" = "ditwl-sn-za-pro-pub-00"
        }
      + tags_all = {
          + "Name" = "ditwl-sn-za-pro-pub-00"
        }
      + vpc_id = (known after apply)
    }

  # aws_subnet.ditwl-sn-zb-pro-pub-04 will be created
  + resource "aws_subnet" "ditwl-sn-zb-pro-pub-04" {
      + arn = (known after apply)
      + assign_ipv6_address_on_creation = false
      + availability_zone = "us-east-1b"
      + availability_zone_id = (known after apply)
      + cidr_block = "172.21.4.0/23"
      + enable_dns64 = false
      + enable_resource_name_dns_a_record_on_launch = false
      + enable_resource_name_dns_aaaa_record_on_launch = false
      + id = (known after apply)
      + ipv6_cidr_block_association_id = (known after apply)
      + ipv6_native = false
      + map_public_ip_on_launch = true
      + owner_id = (known after apply)
      + private_dns_hostname_type_on_launch = (known after apply)
      + tags = {
          + "Name" = "ditwl-sn-zb-pro-pub-04"
        }
      + tags_all = {
          + "Name" = "ditwl-sn-zb-pro-pub-04"
        }
      + vpc_id = (known after apply)
    }

  # aws_vpc.ditlw-vpc will be created
  + resource "aws_vpc" "ditlw-vpc" {
      + arn = (known after apply)
      + cidr_block = "172.21.0.0/19"
      + default_network_acl_id = (known after apply)
      + default_route_table_id = (known after apply)
      + default_security_group_id = (known after apply)
      + dhcp_options_id = (known after apply)
      + enable_dns_hostnames = true
      + enable_dns_support = true
      + enable_network_address_usage_metrics = (known after apply)
      + id = (known after apply)
      + instance_tenancy = "default"
      + ipv6_association_id = (known after apply)
      + ipv6_cidr_block = (known after apply)
      + ipv6_cidr_block_network_border_group = (known after apply)
      + main_route_table_id = (known after apply)
      + owner_id = (known after apply)
      + tags = {
          + "Name" = "ditlw-vpc"
        }
      + tags_all = {
          + "Name" = "ditlw-vpc"
        }
    }

Plan: 14 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  OpenTofu will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

aws_vpc.ditlw-vpc: Creating...
aws_iam_role.ditwl-role-ng-eks-01: Creating...
aws_iam_role.ditwl-role-eks-01: Creating...
aws_iam_role.ditwl-role-eks-01: Creation complete after 3s [id=ditwl-role-eks-01]
aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKSClusterPolicy: Creating...
aws_iam_role.ditwl-role-ng-eks-01: Creation complete after 3s [id=eks-node-group-example]
aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKSWorkerNodePolicy: Creating...
aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKS_CNI_Policy: Creating...
aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEC2ContainerRegistryReadOnly: Creating...
aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKSClusterPolicy: Creation complete after 1s [id=ditwl-role-eks-01-20231126115841412300000001]
aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKS_CNI_Policy: Creation complete after 1s [id=eks-node-group-example-20231126115841448000000002]
aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEC2ContainerRegistryReadOnly: Creation complete after 1s [id=eks-node-group-example-20231126115841857000000003]
aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKSWorkerNodePolicy: Creation complete after 1s [id=eks-node-group-example-20231126115841858200000004]
aws_vpc.ditlw-vpc: Still creating... [10s elapsed]
aws_vpc.ditlw-vpc: Creation complete after 15s [id=vpc-0df03a7e940029420]
aws_internet_gateway.ditwl-ig: Creating...
aws_subnet.ditwl-sn-zb-pro-pub-04: Creating...
aws_subnet.ditwl-sn-za-pro-pub-00: Creating...
aws_internet_gateway.ditwl-ig: Creation complete after 2s [id=igw-056ff063179860748]
aws_route_table.ditwl-rt-pub-main: Creating...
aws_route_table.ditwl-rt-pub-main: Creation complete after 3s [id=rtb-029faee2cc81f5cfd]
aws_main_route_table_association.ditwl-rta-default: Creating...
aws_main_route_table_association.ditwl-rta-default: Creation complete after 1s [id=rtbassoc-05bf1d46d417b061a]
aws_subnet.ditwl-sn-zb-pro-pub-04: Still creating... [10s elapsed]
aws_subnet.ditwl-sn-za-pro-pub-00: Still creating... [10s elapsed]
aws_subnet.ditwl-sn-za-pro-pub-00: Creation complete after 12s [id=subnet-0555a8d5366f3260f]
aws_subnet.ditwl-sn-zb-pro-pub-04: Creation complete after 13s [id=subnet-0b33ac7a7690bcec2]
aws_eks_cluster.ditwl-eks-01: Creating...
aws_eks_cluster.ditwl-eks-01: Still creating... [10s elapsed]
aws_eks_cluster.ditwl-eks-01: Still creating... [20s elapsed]
aws_eks_cluster.ditwl-eks-01: Still creating... [30s elapsed]
...
aws_eks_cluster.ditwl-eks-01: Still creating... [7m40s elapsed]
aws_eks_cluster.ditwl-eks-01: Creation complete after 7m46s [id=ditwl-eks-01]
aws_eks_node_group.ditwl-eks-ng-eks-01: Creating...
aws_eks_node_group.ditwl-eks-ng-eks-01: Still creating... [10s elapsed]
...
aws_eks_node_group.ditwl-eks-ng-eks-01: Still creating... [2m40s elapsed]
aws_eks_node_group.ditwl-eks-ng-eks-01: Creation complete after 2m45s [id=ditwl-eks-01:ditwl-eks-ng-eks-01]

Apply complete! Resources: 14 added, 0 changed, 0 destroyed.

Adding the EKS cluster to the kubeconfig file allows Kubernetes tools to access the cluster. AWS provides a command to update the kubeconfig.

A kubeconfig is a configuration file used by kubectl, the command-line tool for interacting with Kubernetes clusters. This file stores information about clusters, authentication details, context, and other settings needed to communicate with a Kubernetes cluster. It specifies the cluster's API server, authentication methods (such as certificates or tokens), and the default namespace for operations. The kubeconfig file allows users to manage multiple clusters and switch between them easily using kubectl.
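The lookup kubectl performs can be sketched in Python. A context names a cluster (which holds the API server URL), a user (which holds the credentials), and optionally a namespace, which defaults to "default". The sketch below uses a kubeconfig reduced to a Python dict. The cluster, context, and user names and the server URLs are hypothetical placeholders, and the users section is omitted. The real file is YAML, and the user entry written by aws eks update-kubeconfig typically runs the AWS CLI to obtain a token.

```python
# Simplified kubeconfig structure; all names and URLs are hypothetical placeholders.
KUBECONFIG = {
    "current-context": "eks-demo",
    "clusters": [
        {"name": "eks-demo-cluster", "cluster": {"server": "https://EXAMPLE.eks.amazonaws.com"}},
        {"name": "local-cluster", "cluster": {"server": "https://127.0.0.1:6443"}},
    ],
    "contexts": [
        {"name": "eks-demo", "context": {"cluster": "eks-demo-cluster", "user": "eks-user"}},
        {"name": "local", "context": {"cluster": "local-cluster", "user": "local-user",
                                      "namespace": "dev"}},
    ],
}


def _by_name(items, name, kind):
    """Find the entry called `name` in a clusters/contexts list and return its body."""
    for item in items:
        if item["name"] == name:
            return item[kind]
    raise KeyError(f"{kind} {name!r} not found in kubeconfig")


def resolve(config, context_name=None):
    """Return (server, user, namespace) for a context, defaulting to current-context."""
    name = context_name or config["current-context"]
    ctx = _by_name(config["contexts"], name, "context")
    cluster = _by_name(config["clusters"], ctx["cluster"], "cluster")
    return cluster["server"], ctx["user"], ctx.get("namespace", "default")


print(resolve(KUBECONFIG))           # ('https://EXAMPLE.eks.amazonaws.com', 'eks-user', 'default')
print(resolve(KUBECONFIG, "local"))  # ('https://127.0.0.1:6443', 'local-user', 'dev')
```

Switching clusters with kubectl comes down to changing which context is resolved. Changing current-context makes that switch the default for later commands.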

We will use the AWS CLI to add the information needed to access the cluster to the kubeconfig file. Run aws eks update-kubeconfig, specifying the region and the name of the EKS cluster. In this case, the region is us-east-1 and the EKS Cluster name is ditwl-eks-01.

$ aws eks update-kubeconfig --region us-east-1 --name ditwl-eks-01
Added new context arn:aws:eks:us-east-1:123456789:cluster/ditwl-eks-01 to /home/jruiz/.kube/config

Test the configuration by requesting all the resources from the EKS Cluster with kubectl get all. Then run kubectl describe service/kubernetes to get detailed information about the kubernetes service, which points to the EKS control plane's API server.

$ kubectl get all
NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   10.100.0.1   <none>        443/TCP   156m

$ kubectl describe service/kubernetes
Name:              kubernetes
Namespace:         default
Labels:            component=apiserver
                   provider=kubernetes
Annotations:       <none>
Selector:          <none>
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.100.0.1
IPs:               10.100.0.1
Port:              https  443/TCP
TargetPort:        443/TCP
Endpoints:         172.21.1.46:443,172.21.4.127:443
Session Affinity:  None
Events:            <none>

Kubectl shows two endpoints, 172.21.1.46 and 172.21.4.127. These IPs belong to the two subnets created before (172.21.0.0/23 and 172.21.4.0/23). AWS runs Amazon EKS (Elastic Kubernetes Service) endpoints in different availability zones to enhance the service's availability and fault tolerance.
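The mapping from the two endpoint IPs to the tutorial's subnets can be checked with a short Python sketch. It uses the standard ipaddress module, the subnet CIDRs from the Terraform code, and the endpoint IPs from the kubectl describe output above. find_subnet is a hypothetical helper name.

```python
import ipaddress

# Subnet CIDRs from the Terraform code; endpoint IPs from kubectl describe.
SUBNETS = {
    "ditwl-sn-za-pro-pub-00 (us-east-1a)": ipaddress.ip_network("172.21.0.0/23"),
    "ditwl-sn-zb-pro-pub-04 (us-east-1b)": ipaddress.ip_network("172.21.4.0/23"),
}
ENDPOINTS = ["172.21.1.46", "172.21.4.127"]


def find_subnet(ip: str, subnets=SUBNETS) -> str:
    """Return the name of the subnet whose CIDR contains the IP address."""
    addr = ipaddress.ip_address(ip)
    for name, net in subnets.items():
        if addr in net:
            return name
    raise LookupError(f"{ip} is not in any known subnet")


for ip in ENDPOINTS:
    print(ip, "->", find_subnet(ip))
```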

There are many ways to deploy applications to an EKS Cluster, for example:

kubectl apply

We will use kubectl, the Kubernetes command-line tool.

Create a manifest file named color-blue.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: dep-color-blue
  labels: #Labels for the deployment app: color and color: blue
    app: color
    color: blue
spec:
  selector:
    matchLabels: #Manage the PODs that have labels app: color and color: blue
      app: color
      color: blue
  replicas: 1
  template: #For the creation of the pod
    metadata:
      labels: #Tag the POD with labels app: color and color: blue
        app: color
        color: blue
    spec:
      containers:
      - name: color-blue
        image: itwonderlab/color
        resources:
          limits:
            cpu: "1"
            memory: "100Mi"
          requests:
            cpu: "0.250"
            memory: "50Mi"
        env:
        - name: COLOR
          value: "blue"
        ports:
        - name: http2-web
          containerPort: 8080

Deploy the application and check the deployment

Run kubectl apply -f color-blue.yaml to deploy the application.

Run kubectl get pods to get basic details of the running pods and the assigned pod name.

Run kubectl describe pod/NAME_OF_POD with the name from the previous command.

$ kubectl apply -f color-blue.yaml
deployment.apps/dep-color-blue created

$ kubectl get pods
NAME                              READY   STATUS    RESTARTS   AGE
dep-color-blue-69c6985588-6s4gj   1/1     Running   0          4m18s

$ kubectl describe pod/dep-color-blue-69c6985588-6s4gj

Name: dep-color-blue-69c6985588-6s4gj

Namespace: default

Priority: 0

Service Account: default

Node: ip-172-21-1-166.ec2.internal/172.21.1.166

Start Time: Sun, 26 Nov 2023 20:19:32 +0800

Labels: app=color

color=blue

pod-template-hash=69c6985588

Annotations: <none>

Status: Running

IP: 172.21.1.118

IPs:

IP: 172.21.1.118

Controlled By: ReplicaSet/dep-color-blue-69c6985588

Containers:

color-blue:

Container ID: containerd://b4dc93d0560d405200f4453bec6462120d2b483275445c428bff61d146e23c3c

Image: itwonderlab/color

Image ID: docker.io/itwonderlab/color@sha256:7f17f34b41590a7684b4768b8fc4ea8d3d5f111c37d934c1e5fe5ea3567edbec

Port: 8080/TCP

Host Port: 0/TCP

State: Running

Started: Sun, 26 Nov 2023 20:19:33 +0800

Ready: True

Restart Count: 0

Limits:

cpu: 1

memory: 100Mi

Requests:

cpu: 250m

memory: 50Mi

Environment:

COLOR: blue

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-flwtc (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-flwtc:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 8m13s default-scheduler Successfully assigned default/dep-color-blue-69c6985588-6s4gj to ip-172-21-1-166.ec2.internal

Normal Pulling 8m13s kubelet Pulling image "itwonderlab/color"

Normal Pulled 8m12s kubelet Successfully pulled image "itwonderlab/color" in 867ms (867ms including waiting)

Normal Created 8m12s kubelet Created container color-blue

Normal Started 8m12s kubelet Started container color-bluIn a production environment a Load Balancer will be used to publish and balance the container/s, for testing kubectl provider a port forward funtionality.

kubectl port-forward <pod-name> <local-port>:<remote-port> is a command that allows you to create a secure tunnel between your local machine and a pod running on a Kubernetes cluster.

Get the name of the pod with the kubectl get pods command.
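You can also look up the pod name by label instead of copying it from the output. The following is a minimal sketch. It assumes the pod carries the labels set in color-blue.yaml. The function name color_blue_pod is hypothetical.

```shell
# Hypothetical helper: print the name of the first pod labelled
# app=color and color=blue (the labels set in color-blue.yaml).
color_blue_pod() {
  kubectl get pods -l app=color,color=blue \
    -o jsonpath='{.items[0].metadata.name}'
}
```

Usage: kubectl port-forward "pods/$(color_blue_pod)" 8080:8080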

```
$ kubectl port-forward pods/dep-color-blue-69c6985588-6s4gj 8080:8080
Forwarding from 127.0.0.1:8080 -> 8080
Forwarding from [::1]:8080 -> 8080
Handling connection for 8080
```

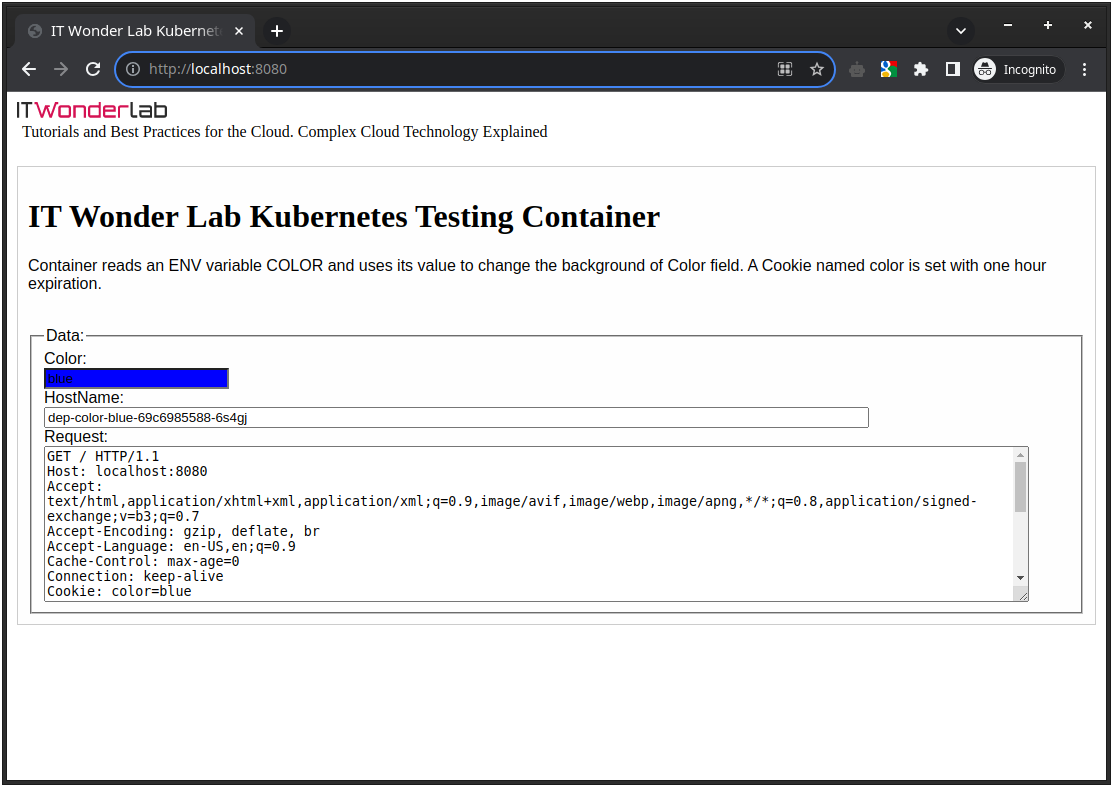

Open a browser and visit http://localhost:8080/, the Container Web Page is shown:
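You can script the same check instead of using a browser. The following is a minimal sketch. It assumes curl is installed and local port 8080 is free. It forwards to deployment/dep-color-blue, which kubectl port-forward accepts and resolves to one of the deployment's pods, so no pod name is needed. The function name and the 3-second wait are assumptions.

```shell
# Hypothetical helper: open a tunnel to the deployment, fetch the page once
# with curl, then close the tunnel. Returns curl's exit status.
check_color_blue_local() {
  local pf_pid rc
  # kubectl selects one pod of the deployment for the tunnel
  kubectl port-forward deployment/dep-color-blue 8080:8080 >/dev/null 2>&1 &
  pf_pid=$!
  sleep 3                      # give the tunnel a moment to start
  curl -fsS http://localhost:8080/ >/dev/null
  rc=$?
  kill "$pf_pid" 2>/dev/null   # close the tunnel
  return "$rc"
}
```

A zero exit status from check_color_blue_local means the container answered over the tunnel.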

In Amazon EKS, a Kubernetes Service of type LoadBalancer is exposed through an Elastic Load Balancer (ELB). EKS interacts with AWS to provision the load balancer, which distributes incoming traffic to the pods associated with the service.

Create a file named color-blue-load-balancer.yaml defining the LoadBalancer service:
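A minimal manifest for this service might look like the following sketch, written to disk with a shell heredoc. The service name color-service-lb and port 8080 match the kubectl output shown further down. The selector (app: color, color: blue) is an assumption, chosen to match the pod labels in color-blue.yaml.

```shell
# Write a sketch of the LoadBalancer Service manifest.
# Assumption: the selector targets the pods labelled by color-blue.yaml.
cat > color-blue-load-balancer.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: color-service-lb
spec:
  type: LoadBalancer
  selector:
    app: color
    color: blue
  ports:
  - name: http
    protocol: TCP
    port: 8080        # port exposed by the load balancer
    targetPort: 8080  # containerPort of the color-blue container
EOF
```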

Apply the change to the EKS Cluster:

```
$ kubectl apply -f color-blue-load-balancer.yaml
service/color-service-lb created
```

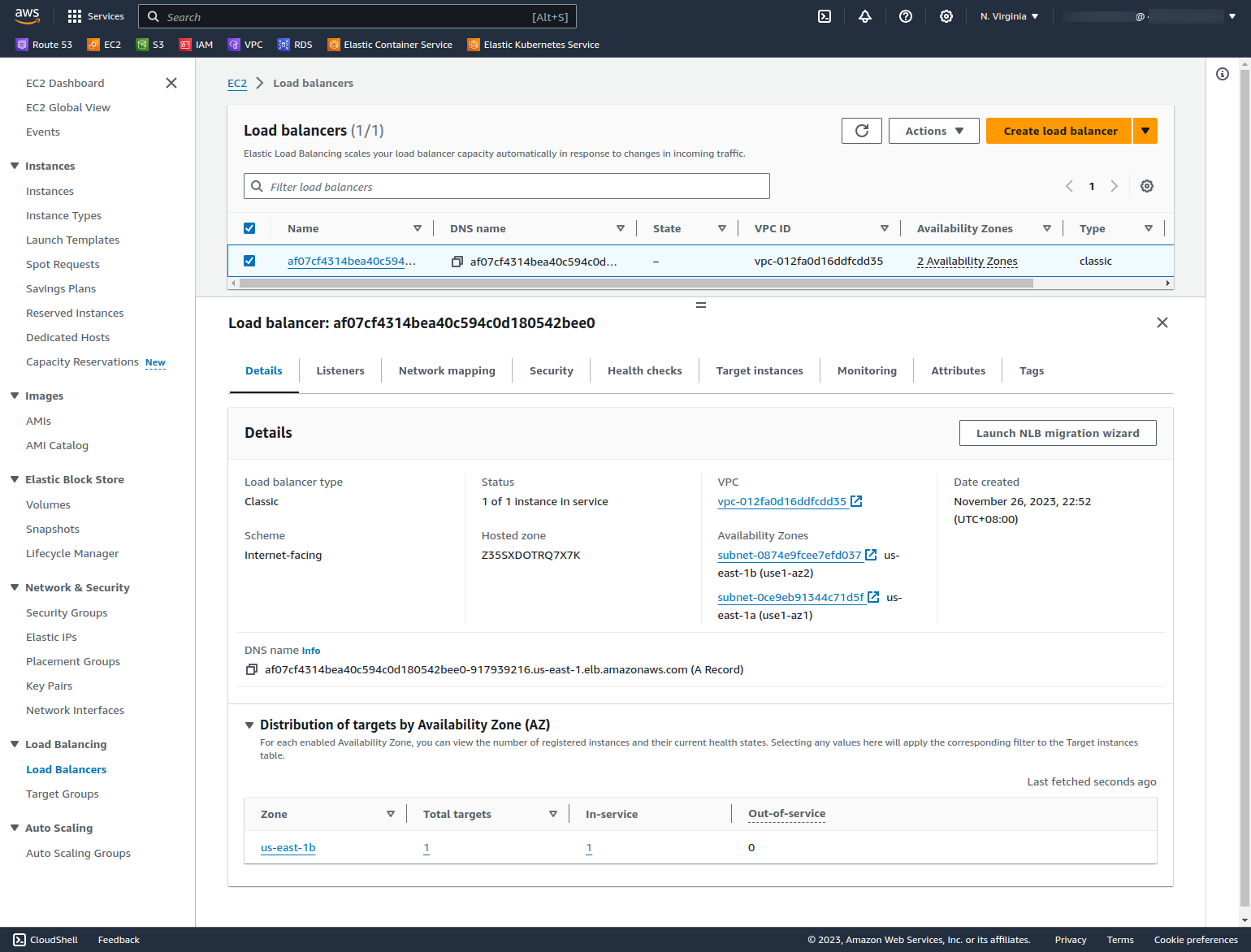

Get the URL that AWS assigned to the load balancer by querying the Kubernetes services with kubectl get service:

```
$ kubectl get service
NAME               TYPE           CLUSTER-IP      EXTERNAL-IP                                                               PORT(S)          AGE
color-service-lb   LoadBalancer   10.100.93.223   aa6ac8df5fb4d4be39480712e325f27f-773750948.us-east-1.elb.amazonaws.com   8080:30299/TCP   7m10s
kubernetes         ClusterIP      10.100.0.1      <none>                                                                    443/TCP          46m
```
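The EXTERNAL-IP column stays empty until AWS finishes creating the load balancer, so scripts usually poll for it. The following is a minimal sketch. The function name, the 30 tries and the 10-second interval are assumptions.

```shell
# Hypothetical helper: poll a Service until AWS publishes the load balancer
# hostname, then print it. Gives up after 30 tries, 10 seconds apart.
wait_for_lb_hostname() {
  local svc="${1:-color-service-lb}" host="" tries=0
  while [ "$tries" -lt 30 ]; do
    host=$(kubectl get service "$svc" \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' 2>/dev/null)
    if [ -n "$host" ]; then
      echo "$host"
      return 0
    fi
    tries=$((tries + 1))
    sleep 10
  done
  return 1
}
```

Usage: curl "http://$(wait_for_lb_hostname color-service-lb):8080/"

The DNS name may take a few more minutes to start resolving after it appears.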

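Check in the AWS Console that a new Elastic Load Balancer has been created. Without extra annotations, the in-tree AWS cloud provider creates a Classic Load Balancer rather than an ALB.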

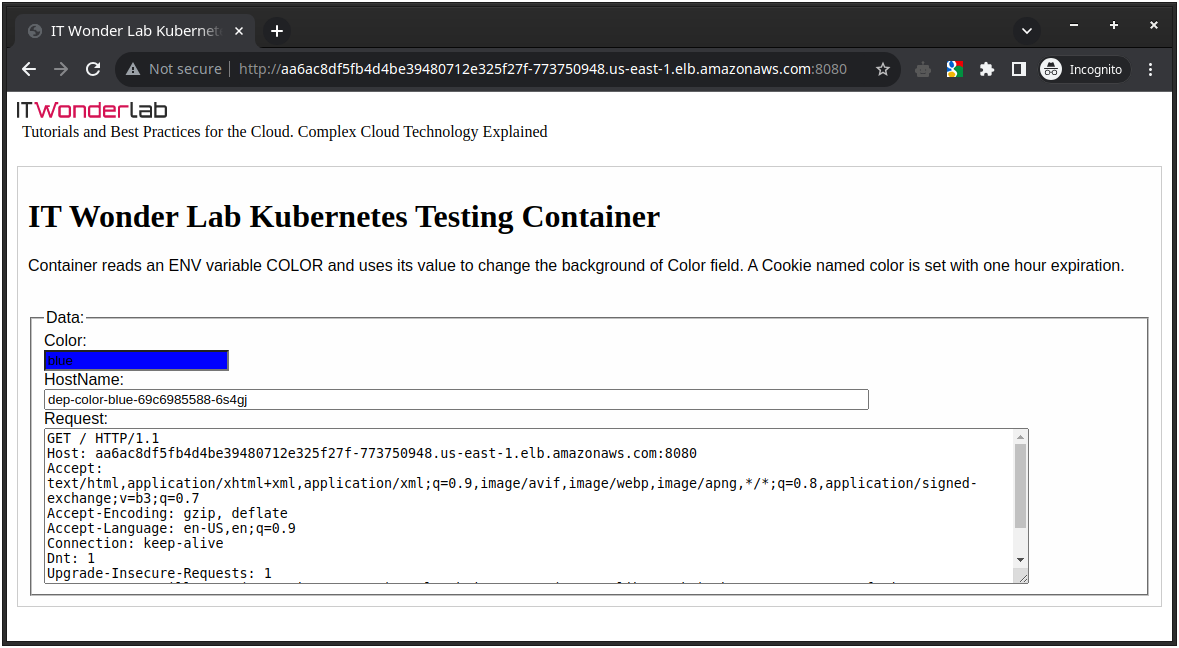

Access the webpage

Open http://aa6ac8df5fb4d4be39480712e325f27f-773750948.us-east-1.elb.amazonaws.com:8080/

Please note that executing the tutorial steps may result in AWS usage charges. Make sure to review your AWS account and associated services to avoid incurring any unintended costs during or after the tutorial.

Before removing the infrastructure with Terraform, make sure that infrastructure not managed by Terraform (like the load balancer) has been removed from the cluster. Otherwise, its dependencies on the VPC will block the destroy.

Check the Kubernetes cluster with kubectl get all, and remove all services that create AWS resources, such as services of type LoadBalancer.

```
$ kubectl get all
NAME                                  READY   STATUS    RESTARTS   AGE
pod/dep-color-blue-69c6985588-nkf8h   1/1     Running   0          4m39s

NAME                       TYPE           CLUSTER-IP       EXTERNAL-IP                                                                PORT(S)          AGE
service/color-service-lb   LoadBalancer   10.100.138.209   ae942cf1e5252488e9682e1dcf02efdc-2033990830.us-east-1.elb.amazonaws.com   8080:32082/TCP   6m42s
service/kubernetes         ClusterIP      10.100.0.1       <none>                                                                     443/TCP          17m

NAME                             READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/dep-color-blue   1/1     1            1           4m40s

NAME                                        DESIRED   CURRENT   READY   AGE
replicaset.apps/dep-color-blue-69c6985588   1         1         1       4m41s
```

Run kubectl delete -f color-blue.yaml to remove the application and kubectl delete -f color-blue-load-balancer.yaml to remove the load balancer.

```
kubectl delete -f color-blue.yaml
kubectl delete -f color-blue-load-balancer.yaml
```
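To avoid forgetting this step, a small guard can refuse to run the destroy while any Service of type LoadBalancer still exists. The following is a minimal sketch using kubectl's JSONPath filter syntax. The function names are hypothetical. Replace tofu with terraform if that is the tool in use.

```shell
# Hypothetical helper: print namespace/name of every LoadBalancer Service.
list_lb_services() {
  kubectl get services --all-namespaces \
    -o jsonpath='{range .items[?(@.spec.type=="LoadBalancer")]}{.metadata.namespace}/{.metadata.name}{"\n"}{end}'
}

# Hypothetical guard: only run the destroy when no LoadBalancer Service is
# left, because its ELB would otherwise block the deletion of the VPC.
safe_destroy() {
  local lbs
  lbs=$(list_lb_services)
  if [ -n "$lbs" ]; then
    echo "Delete these LoadBalancer services before destroying:" >&2
    echo "$lbs" >&2
    return 1
  fi
  tofu destroy   # or: terraform destroy
}
```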

Run the destroy sub-command using Terraform or OpenTofu (it can take up to 20 minutes):

```
$ tofu destroy
data.aws_iam_policy_document.ditwl-ipd-eks-01: Reading...
data.aws_iam_policy_document.ditwl-ipd-ng-eks-01: Reading...
aws_vpc.ditlw-vpc: Refreshing state... [id=vpc-012fa0d16ddfcdd35]
data.aws_iam_policy_document.ditwl-ipd-eks-01: Read complete after 0s [id=3552664922]
data.aws_iam_policy_document.ditwl-ipd-ng-eks-01: Read complete after 0s [id=2851119427]
aws_iam_role.ditwl-role-ng-eks-01: Refreshing state... [id=eks-node-group-example]
aws_iam_role.ditwl-role-eks-01: Refreshing state... [id=ditwl-role-eks-01]
...
Plan: 0 to add, 0 to change, 14 to destroy.

Do you really want to destroy all resources?
  OpenTofu will destroy all your managed infrastructure, as shown above.
  There is no undo. Only 'yes' will be accepted to confirm.

  Enter a value: yes

aws_main_route_table_association.ditwl-rta-default: Destroying... [id=rtbassoc-0ab3f4326406756c4]
aws_eks_node_group.ditwl-eks-ng-eks-01: Destroying... [id=ditwl-eks-01:ditwl-eks-ng-eks-01]
aws_main_route_table_association.ditwl-rta-default: Destruction complete after 3s
aws_route_table.ditwl-rt-pub-main: Destroying... [id=rtb-01025f7642cc2bb39]
aws_route_table.ditwl-rt-pub-main: Destruction complete after 4s
aws_internet_gateway.ditwl-ig: Destroying... [id=igw-081ae03bb057548b0]
aws_internet_gateway.ditwl-ig: Destruction complete after 6m45s
...
aws_eks_node_group.ditwl-eks-ng-eks-01: Destruction complete after 8m36s
aws_iam_role.ditwl-role-ng-eks-01: Destruction complete after 2s
..
aws_eks_cluster.ditwl-eks-01: Destruction complete after 1m44s
aws_iam_role_policy_attachment.ditwl-role-eks-01-policy-attachment-AmazonEKSClusterPolicy: Destroying... [id=ditwl-role-eks-01-20231126142636871500000004]
...
aws_vpc.ditlw-vpc: Destroying... [id=vpc-012fa0d16ddfcdd35]
aws_vpc.ditlw-vpc: Destruction complete after 3s

Destroy complete! Resources: 14 destroyed.
```

Once an EC2 instance from the EKS Node Group has been created, it needs to connect to and enroll in the Kubernetes cluster. If it can't connect or enrollment fails, an error is shown after at least 20 minutes:

```
Error: waiting for EKS Node Group (ditwl-eks-01:ditwl-eks-ng-eks-01) to create: unexpected state 'CREATE_FAILED', wanted target 'ACTIVE'. last error: 1 error occurred:
│ * i-0f7df2df721f28b1f: NodeCreationFailure: Instances failed to join the kubernetes cluster
```

Review the security groups and the role associated with the EKS Node Group.
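The AWS CLI can show the health issues that EKS recorded for a failed node group, which helps narrow down the cause. The following is a minimal sketch. The default cluster and node group names are taken from the error message. The function name nodegroup_issues is hypothetical.

```shell
# Hypothetical helper: print code and message of each health issue that EKS
# recorded for a node group (defaults taken from the error message).
nodegroup_issues() {
  aws eks describe-nodegroup \
    --cluster-name "${1:-ditwl-eks-01}" \
    --nodegroup-name "${2:-ditwl-eks-ng-eks-01}" \
    --query 'nodegroup.health.issues[].[code,message]' \
    --output text
}
```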

Some resources, such as the load balancer created from a Kubernetes manifest, are created outside of Terraform. In an EKS environment, Kubernetes interacts with AWS to provision an Elastic Load Balancer (ELB) inside the VPC. This creates a dependency that prevents Terraform from destroying the VPC:

```
Error: deleting EC2 VPC (vpc-0df03a7e940029420): operation error EC2: DeleteVpc, https response error StatusCode: 400, RequestID: 51b3a990-ced1-430f-ba10-16d41513c235, api error DependencyViolation: The vpc 'vpc-0df03a7e940029420' has dependencies and cannot be deleted.
```

Remove the remaining resources using the AWS Console, then run the destroy again. Next time, make sure to delete the load balancers using kubectl before destroying the EKS cluster with Terraform.
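To find what is still attached to the VPC, you can list the Classic Load Balancers and the network interfaces that remain in it. The following is a minimal sketch. It assumes the leftover load balancer is a Classic Load Balancer, which is what the in-tree provider creates by default. The function name vpc_leftovers is hypothetical.

```shell
# Hypothetical helper: list what still lives in a VPC that cannot be deleted.
vpc_leftovers() {
  local vpc_id="$1"
  # Classic Load Balancers registered in this VPC
  aws elb describe-load-balancers \
    --query "LoadBalancerDescriptions[?VPCId=='${vpc_id}'].LoadBalancerName" \
    --output text
  # Every network interface left in the VPC (load balancers, nodes, ...)
  aws ec2 describe-network-interfaces \
    --filters "Name=vpc-id,Values=${vpc_id}" \
    --query 'NetworkInterfaces[].[NetworkInterfaceId,Description]' \
    --output text
}
```

Usage: vpc_leftovers vpc-0df03a7e940029420

After that, remove a listed Classic Load Balancer with aws elb delete-load-balancer --load-balancer-name NAME.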

Explore Terraform modularization, AWS autoscaling, and CI/CD. Use Kubernetes locally for the development of containers by installing K3s as a local Kubernetes cluster.

IT Wonder Lab tutorials are based on the diverse experience of Javier Ruiz, who founded and bootstrapped a SaaS company in the energy sector. His company, later acquired by a NASDAQ traded company, managed over €2 billion per year of electricity for prominent energy producers across Europe and America. Javier has over 25 years of experience in building and managing IT companies, developing cloud infrastructure, leading cross-functional teams, and transitioning his own company from on-premises, consulting, and custom software development to a successful SaaS model that scaled globally.
