Learn how to launch a Postgres database instance in a Kubernetes cluster and safely store its data in a persistent location.

Kubernetes no longer includes an internal NFS StorageClass for PersistentVolumeClaims. See the updated tutorial that uses the nfs-subdir-external-provisioner to provide persistent NFS storage for PersistentVolumeClaims, with a Postgres server as the example.

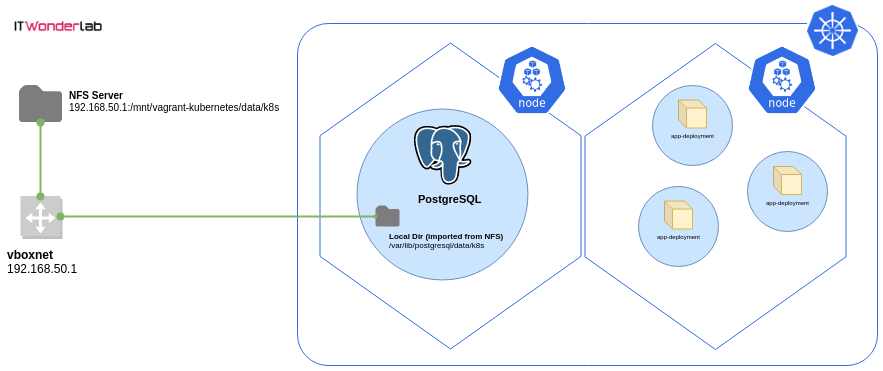

The Postgres instance stores all its data on a remote NFS server, so the database survives both the destruction of the Kubernetes cluster and unintended disruptions of the Postgres Pod.

This configuration is suitable only for developing and testing applications in a local development Kubernetes cluster. It continues the tutorial How to create a Kubernetes cluster for local development using Vagrant, Ansible, and VirtualBox.

Prerequisites:

Kubernetes containers are mostly used for stateless applications, where each instance is disposable and keeps no data inside the container that must survive restarts or be retained for client sessions, because container storage is ephemeral.

In contrast, stateful applications need to store data and keep it available across restarts and sessions. Databases like Postgres or MySQL are typical stateful applications.

Kubernetes provides support for many types of volumes depending on the Cloud provider. For our local development Kubernetes Cluster, the most appropriate and easy to configure is an NFS volume.

If you don't have an existing NFS Server, it is easy to create a local NFS server for our Kubernetes Cluster.

Install the required Ubuntu packages and create a local directory /mnt/vagrant-kubernetes:

    sudo apt install nfs-kernel-server
    sudo mkdir -p /mnt/vagrant-kubernetes
    sudo mkdir -p /mnt/vagrant-kubernetes/data
    sudo chown nobody:nogroup /mnt/vagrant-kubernetes
    sudo chmod 777 /mnt/vagrant-kubernetes

Edit the /etc/exports file to add the exported local directory and limit the share to the CIDR used by the Kubernetes Cluster nodes for external access.

If using the example from the Kubernetes Vagrant Cluster tutorial, use CIDR 192.168.50.0/24 which corresponds to the network used by the VirtualBox machines to access the external networks.

    /mnt/vagrant-kubernetes 192.168.50.0/24(rw,sync,no_subtree_check,insecure,no_root_squash)
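Because the export is limited to that CIDR, every cluster node needs an address inside it, or its NFS mounts will be refused. The following is a minimal sketch of such a check in plain shell arithmetic. The helper names `ip_to_int` and `in_cidr` are hypothetical, and the node addresses 192.168.50.11 to 192.168.50.13 are assumed from the Vagrant cluster tutorial.

```shell
# Hypothetical helpers (not part of any NFS or Kubernetes tool).

# Convert a dotted-quad IPv4 address into a single integer.
ip_to_int() {
  _old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on "." into $1..$4
  IFS=$_old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed when address $1 falls inside CIDR block $2 (for example 192.168.50.0/24).
in_cidr() {
  _net=${2%/*}
  _bits=${2#*/}
  _mask=$(( (0xFFFFFFFF << (32 - _bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & _mask )) -eq $(( $(ip_to_int "$_net") & _mask )) ]
}

# Assumed node addresses; adjust them to your own cluster.
for node in 192.168.50.11 192.168.50.12 192.168.50.13; do
  if in_cidr "$node" 192.168.50.0/24; then
    echo "$node is covered by the export"
  else
    echo "$node is NOT covered by the export"
  fi
done
```

If a node is reported as not covered, either widen the CIDR in /etc/exports or add a second entry for that network.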

Start the NFS server:

    $ sudo exportfs -a
    $ sudo systemctl restart nfs-kernel-server
    $ sudo exportfs -v
    /mnt/vagrant-kubernetes 192.168.50.0/24(rw,wdelay,insecure,no_root_squash,no_subtree_check,sec=sys,rw,insecure,no_root_squash,no_all_squash)

The Postgres database deployment is composed of the following Kubernetes objects: a ConfigMap, a PersistentVolume, a PersistentVolumeClaim, a Deployment, and a NodePort Service.

(Full deployment file is shown at the end of the tutorial)

The config map is a key-value store that is available to all Kubernetes nodes. The data will be used to set some environment variables of the Postgres container:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: psql-itwl-dev-01-cm
    data:
      POSTGRES_DB: db
      POSTGRES_USER: user
      POSTGRES_PASSWORD: pass
      PGDATA: /var/lib/postgresql/data/k8s

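The PersistentVolume object defines an NFS volume located on server 192.168.50.1 at path /mnt/vagrant-kubernetes/data.

The volume has the following configuration: the labels app: psql and ver: itwl-dev-01-pv, a capacity of 1Gi, the ReadWriteMany access mode, and the Retain reclaim policy.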

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: psql-itwl-dev-01-pv
      labels: # Labels
        app: psql
        ver: itwl-dev-01-pv
    spec:
      capacity:
        storage: 1Gi
      accessModes:
        - ReadWriteMany
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 192.168.50.1
        path: "/mnt/vagrant-kubernetes/data"

The PersistentVolumeClaim is the object that is assigned to the deployment. It describes the volume it needs, and Kubernetes tries to find a PersistentVolume that satisfies all the requirements.

The PersistentVolumeClaim asks for a volume with the labels app: psql and ver: itwl-dev-01-pv, an access mode of ReadWriteMany, and at least 1Gi (1 gibibyte) of storage.
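The "at least" part of that matching is a size comparison between the claim's request and the volume's capacity. As a rough sketch, the comparison could be expressed in shell as below. The helper names `to_bytes` and `capacity_satisfies` are hypothetical, and only the binary suffixes Ki, Mi, Gi, and Ti are handled, which is a small subset of the quantity formats Kubernetes accepts.

```shell
# Hypothetical helpers illustrating the capacity check; Kubernetes itself
# accepts more quantity formats than the binary suffixes handled here.

# Convert a quantity such as 512Mi or 1Gi into bytes.
to_bytes() {
  case $1 in
    *Ki) echo $(( ${1%Ki} * 1024 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
    *Ti) echo $(( ${1%Ti} * 1024 * 1024 * 1024 * 1024 )) ;;
    *)   echo "$1" ;;   # assume a plain number of bytes
  esac
}

# Succeed when a volume of capacity $1 can hold a claim requesting $2.
capacity_satisfies() {
  [ "$(to_bytes "$1")" -ge "$(to_bytes "$2")" ]
}

if capacity_satisfies 1Gi 1Gi; then
  echo "a 1Gi volume satisfies a 1Gi claim"
fi
if ! capacity_satisfies 512Mi 1Gi; then
  echo "a 512Mi volume is too small for a 1Gi claim"
fi
```

The labels and the access mode have to match as well; this sketch covers only the size requirement.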

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: psql-itwl-dev-01-pvc
    spec:
      selector:
        matchLabels: # Select a volume with these labels
          app: psql
          ver: itwl-dev-01-pv
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Gi

The Deployment is a regular application deployment descriptor with the following characteristics:

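A single replica will be deployed. Istio is not needed here, so the annotation sidecar.istio.io/inject: "false" prevents the sidecar proxy from being injected. See Installing Istio in Kubernetes for an explanation of Istio.

The container uses the latest Postgres image from the public Docker registry (https://hub.docker.com/) and sets: the exposed container port 5432, environment variables loaded from the ConfigMap psql-itwl-dev-01-cm, and a volume mounted at /var/lib/postgresql/data that is backed by the PersistentVolumeClaim psql-itwl-dev-01-pvc.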

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: psql-itwl-dev-01
      labels:
        app: psql
        ver: itwl-dev-01
    spec:
      replicas: 1
      selector:
        matchLabels: # Manage Pods that have labels app: psql and ver: itwl-dev-01
          app: psql
          ver: itwl-dev-01
      template: # For the creation of the Pod
        metadata:
          labels:
            app: psql
            ver: itwl-dev-01
          annotations:
            sidecar.istio.io/inject: "false"
        spec:
          containers:
            - name: postgres
              image: postgres:latest
              imagePullPolicy: "IfNotPresent"
              ports:
                - containerPort: 5432
              envFrom:
                - configMapRef:
                    name: psql-itwl-dev-01-cm
              volumeMounts:
                - mountPath: /var/lib/postgresql/data
                  name: pgdatavol
          volumes:
            - name: pgdatavol
              persistentVolumeClaim:
                claimName: psql-itwl-dev-01-pvc

Since our local Kubernetes cluster doesn't have a cloud-provided load balancer, we use the NodePort functionality to access the ports published by containers.

The NodePort Service publishes Postgres on port 30100 of every Kubernetes master and worker node:

    apiVersion: v1
    kind: Service
    metadata:
      name: postgres-service-np
    spec:
      type: NodePort
      selector:
        app: psql
      ports:
        - name: psql
          port: 5432 # Cluster IP 10.109.199.234:port (docker exposed port)
          nodePort: 30100 # (EXTERNAL-IP VirtualBox IPs) 192.168.50.11:nodePort 192.168.50.12:nodePort 192.168.50.13:nodePort
          protocol: TCP
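The nodePort value has to fall inside the range the API server allows, which is 30000-32767 by default and can be changed with the kube-apiserver flag --service-node-port-range. A small sketch of that check follows; the helper name `valid_node_port` is hypothetical and the default range is assumed.

```shell
# Hypothetical helper; assumes the default NodePort range 30000-32767.
valid_node_port() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

for port in 30100 5432; do
  if valid_node_port "$port"; then
    echo "$port can be used as a nodePort"
  else
    echo "$port is outside the default nodePort range"
  fi
done
```

This is why the Service maps the Postgres port 5432 to 30100 instead of exposing 5432 directly on the nodes.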

Full file postgresql.yaml with all the resources to be deployed in Kubernetes:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: psql-itwl-dev-01-cm
    data:
      POSTGRES_DB: db
      POSTGRES_USER: user
      POSTGRES_PASSWORD: pass
      PGDATA: /var/lib/postgresql/data/k8s
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: psql-itwl-dev-01-pv
      labels: # Labels
        app: psql
        ver: itwl-dev-01-pv
    spec:
      capacity:
        storage: 1Gi
      accessModes:
        - ReadWriteMany
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 192.168.50.1
        path: "/mnt/vagrant-kubernetes/data"
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: psql-itwl-dev-01-pvc
    spec:
      selector:
        matchLabels: # Select a volume with these labels
          app: psql
          ver: itwl-dev-01-pv
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Gi
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: psql-itwl-dev-01
      labels:
        app: psql
        ver: itwl-dev-01
    spec:
      replicas: 1
      selector:
        matchLabels: # Manage Pods that have labels app: psql and ver: itwl-dev-01
          app: psql
          ver: itwl-dev-01
      template: # For the creation of the Pod
        metadata:
          labels:
            app: psql
            ver: itwl-dev-01
          annotations:
            sidecar.istio.io/inject: "false"
        spec:
          containers:
            - name: postgres
              image: postgres:latest
              imagePullPolicy: "IfNotPresent"
              ports:
                - containerPort: 5432
              envFrom:
                - configMapRef:
                    name: psql-itwl-dev-01-cm
              volumeMounts:
                - mountPath: /var/lib/postgresql/data
                  name: pgdatavol
          volumes:
            - name: pgdatavol
              persistentVolumeClaim:
                claimName: psql-itwl-dev-01-pvc
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: postgres-service-np
    spec:
      type: NodePort
      selector:
        app: psql
      ports:
        - name: psql
          port: 5432 # Cluster IP 10.109.199.234:port (docker exposed port)
          nodePort: 30100 # (EXTERNAL-IP VirtualBox IPs) 192.168.50.11:nodePort 192.168.50.12:nodePort 192.168.50.13:nodePort
          protocol: TCP

Apply the file to the cluster:

    $ kubectl apply -f postgresql.yaml
    configmap/psql-itwl-dev-01-cm created
    persistentvolume/psql-itwl-dev-01-pv created
    persistentvolumeclaim/psql-itwl-dev-01-pvc created
    deployment.apps/psql-itwl-dev-01 created
    service/postgres-service-np created

Check that all resources have been created:

    $ kubectl get all
    NAME                                    READY   STATUS    RESTARTS   AGE
    pod/psql-itwl-dev-01-594c7468c7-p9k9l   1/1     Running   0          6s

    NAME                          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    service/kubernetes            ClusterIP   10.96.0.1       <none>        443/TCP          4d1h
    service/postgres-service-np   NodePort    10.105.135.29   <none>        5432:30100/TCP   6s

    NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/psql-itwl-dev-01   1/1     1            1           6s

    NAME                                          DESIRED   CURRENT   READY   AGE
    replicaset.apps/psql-itwl-dev-01-594c7468c7   1         1         1       6s
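`kubectl get all` does not list PersistentVolumes or PersistentVolumeClaims, so it can be worth checking them separately and waiting for the Deployment to finish rolling out. The following sketch wraps the relevant commands in a hypothetical function, `check_postgres`. The `KUBECTL` variable is an assumption of this sketch, added so that the function can be dry-run with `KUBECTL=echo` on a machine without cluster access.

```shell
# Hypothetical wrapper around standard kubectl commands.
# KUBECTL is an assumption of this sketch: set KUBECTL=echo to print the
# commands instead of running them.
check_postgres() {
  _kubectl=${KUBECTL:-kubectl}
  # 1. Block until the Deployment's replica is available (or time out).
  # 2. Show whether the claim has been bound to the NFS volume.
  $_kubectl rollout status deployment/psql-itwl-dev-01 --timeout=120s &&
    $_kubectl get pv,pvc
}

# Dry run: print the commands that would be executed.
KUBECTL=echo check_postgres
```

On the cluster, call `check_postgres` without the override; the claim psql-itwl-dev-01-pvc should be reported with STATUS Bound.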

Check the Pod logs:

    $ kubectl logs pod/psql-itwl-dev-01-594c7468c7-p9k9l -f
    The files belonging to this database system will be owned by user "postgres".
    This user must also own the server process.

    The database cluster will be initialized with locale "en_US.utf8".
    The default database encoding has accordingly been set to "UTF8".
    The default text search configuration will be set to "english".

    Data page checksums are disabled.

    fixing permissions on existing directory /var/lib/postgresql/data/k8s ... ok
    creating subdirectories ... ok
    selecting default max_connections ... 100
    selecting default shared_buffers ... 128MB
    selecting dynamic shared memory implementation ... posix
    creating configuration files ... ok
    running bootstrap script ... ok
    performing post-bootstrap initialization ... ok

    WARNING: enabling "trust" authentication for local connections
    You can change this by editing pg_hba.conf or using the option -A, or
    --auth-local and --auth-host, the next time you run initdb.
    syncing data to disk ... ok

    Success. You can now start the database server using:

        pg_ctl -D /var/lib/postgresql/data/k8s -l logfile start

    waiting for server to start....2019-06-10 17:59:41.009 UTC [42] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"
    2019-06-10 17:59:41.104 UTC [43] LOG: database system was shut down at 2019-06-10 17:59:40 UTC
    2019-06-10 17:59:41.141 UTC [42] LOG: database system is ready to accept connections
    done
    server started
    CREATE DATABASE

    /usr/local/bin/docker-entrypoint.sh: ignoring /docker-entrypoint-initdb.d/*

    2019-06-10 17:59:48.590 UTC [42] LOG: received fast shutdown request
    waiting for server to shut down...2019-06-10 17:59:48.597 UTC [42] LOG: aborting any active transactions
    .2019-06-10 17:59:48.603 UTC [42] LOG: background worker "logical replication launcher" (PID 49) exited with exit code 1
    2019-06-10 17:59:48.603 UTC [44] LOG: shutting down
    2019-06-10 17:59:48.715 UTC [42] LOG: database system is shut down
    done
    server stopped

    PostgreSQL init process complete; ready for start up.

    2019-06-10 17:59:48.854 UTC [1] LOG: listening on IPv4 address "0.0.0.0", port 5432
    2019-06-10 17:59:48.854 UTC [1] LOG: listening on IPv6 address "::", port 5432
    2019-06-10 17:59:48.865 UTC [1] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"
    2019-06-10 17:59:48.954 UTC [60] LOG: database system was shut down at 2019-06-10 17:59:48 UTC
    2019-06-10 17:59:48.997 UTC [1] LOG: database system is ready to accept connections

Use the Postgres client psql to connect to the database (install it with apt-get install -y postgresql-client). The password is pass.

    $ psql db -h 192.168.50.11 -p 30100 -U user
    Password for user user:
    psql (10.8 (Ubuntu 10.8-0ubuntu0.18.10.1), server 11.3 (Debian 11.3-1.pgdg90+1))
    WARNING: psql major version 10, server major version 11.
             Some psql features might not work.
    Type "help" for help.

    db=# CREATE TABLE COLOR(
    db(# ID SERIAL PRIMARY KEY NOT NULL,
    db(# NAME TEXT NOT NULL UNIQUE,
    db(# RED SMALLINT NOT NULL,
    db(# GREEN SMALLINT NOT NULL,
    db(# BLUE SMALLINT NOT NULL
    db(# );
    CREATE TABLE
    db=#
    db=# INSERT INTO COLOR (NAME,RED,GREEN,BLUE) VALUES('GREEN',0,128,0);
    INSERT 0 1
    db=# INSERT INTO COLOR (NAME,RED,GREEN,BLUE) VALUES('RED',255,0,0);
    INSERT 0 1
    db=# INSERT INTO COLOR (NAME,RED,GREEN,BLUE) VALUES('BLUE',0,0,255);
    INSERT 0 1
    db=# INSERT INTO COLOR (NAME,RED,GREEN,BLUE) VALUES('WHITE',255,255,255);
    INSERT 0 1
    db=# INSERT INTO COLOR (NAME,RED,GREEN,BLUE) VALUES('YELLOW',255,255,0);
    INSERT 0 1
    db=# INSERT INTO COLOR (NAME,RED,GREEN,BLUE) VALUES('LIME',0,255,0);
    INSERT 0 1
    db=# INSERT INTO COLOR (NAME,RED,GREEN,BLUE) VALUES('BLACK',255,255,255);
    INSERT 0 1
    db=# INSERT INTO COLOR (NAME,RED,GREEN,BLUE) VALUES('GRAY',128,128,128);
    INSERT 0 1
    db=#
    db=# SELECT * FROM COLOR;
     id |  name  | red | green | blue
    ----+--------+-----+-------+------
      1 | GREEN  |   0 |   128 |    0
      2 | RED    | 255 |     0 |    0
      3 | BLUE   |   0 |     0 |  255
      4 | WHITE  | 255 |   255 |  255
      5 | YELLOW | 255 |   255 |    0
      6 | LIME   |   0 |   255 |    0
      7 | BLACK  | 255 |   255 |  255
      8 | GRAY   | 128 |   128 |  128
    (8 rows)

    db=#

You can now destroy the Postgres instance and create it again; the data is preserved.

    $ kubectl delete -f postgresql.yaml
    configmap "psql-itwl-dev-01-cm" deleted
    persistentvolume "psql-itwl-dev-01-pv" deleted
    persistentvolumeclaim "psql-itwl-dev-01-pvc" deleted
    deployment.apps "psql-itwl-dev-01" deleted
    service "postgres-service-np" deleted

    $ kubectl apply -f postgresql.yaml
    configmap/psql-itwl-dev-01-cm created
    persistentvolume/psql-itwl-dev-01-pv created
    persistentvolumeclaim/psql-itwl-dev-01-pvc created
    deployment.apps/psql-itwl-dev-01 created
    service/postgres-service-np created

    $ kubectl get all
    NAME                                    READY   STATUS              RESTARTS   AGE
    pod/psql-itwl-dev-01-594c7468c7-7hrzt   0/1     ContainerCreating   0          4s

    NAME                          TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
    service/kubernetes            ClusterIP   10.96.0.1      <none>        443/TCP          4d1h
    service/postgres-service-np   NodePort    10.100.67.43   <none>        5432:30100/TCP   3s

    NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/psql-itwl-dev-01   0/1     1            0           4s

    NAME                                          DESIRED   CURRENT   READY   AGE
    replicaset.apps/psql-itwl-dev-01-594c7468c7   1         1         0       4s

    $ kubectl logs pod/psql-itwl-dev-01-594c7468c7-7hrzt -f
    2019-06-10 18:05:51.456 UTC [1] LOG: listening on IPv4 address "0.0.0.0", port 5432
    2019-06-10 18:05:51.457 UTC [1] LOG: listening on IPv6 address "::", port 5432
    2019-06-10 18:05:51.466 UTC [1] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"
    2019-06-10 18:05:51.547 UTC [22] LOG: database system was interrupted; last known up at 2019-06-10 18:04:54 UTC
    2019-06-10 18:05:53.043 UTC [22] LOG: database system was not properly shut down; automatic recovery in progress
    2019-06-10 18:05:53.055 UTC [22] LOG: redo starts at 0/1676B10
    2019-06-10 18:05:53.055 UTC [22] LOG: invalid record length at 0/1676BB8: wanted 24, got 0
    2019-06-10 18:05:53.055 UTC [22] LOG: redo done at 0/1676B48
    2019-06-10 18:05:53.087 UTC [1] LOG: database system is ready to accept connections
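The data survives because Postgres writes to PGDATA, which sits under the container's mount path and therefore lands on the NFS export instead of in the container's ephemeral storage. As a sketch of where to look on the NFS server, the hypothetical helper `nfs_host_path` below maps a path inside the container to the corresponding path on the server, using the export path, mountPath, and PGDATA values from the manifests above.

```shell
# Hypothetical helper: translate a path inside the container into the
# matching path on the NFS server.
#   $1 = NFS export path from the PersistentVolume
#   $2 = mountPath from the Deployment
#   $3 = path inside the container (for example PGDATA)
nfs_host_path() {
  case $3 in
    "$2"|"$2"/*)
      _rest=${3#"$2"}      # part of the path below the mount point
      echo "$1$_rest"
      ;;
    *)
      echo "path $3 is not under mount $2" >&2
      return 1
      ;;
  esac
}

data_dir=$(nfs_host_path /mnt/vagrant-kubernetes/data \
                         /var/lib/postgresql/data \
                         /var/lib/postgresql/data/k8s)
echo "$data_dir"
```

Listing that directory on the NFS server (for example with `sudo ls`) should show the Postgres data files, such as PG_VERSION, even while the Kubernetes objects are deleted.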

Use the psql client to check that the database tables have been preserved:

    $ psql db -h 192.168.50.11 -p 30100 -U user
    Password for user user:
    psql (10.8 (Ubuntu 10.8-0ubuntu0.18.10.1), server 11.3 (Debian 11.3-1.pgdg90+1))
    WARNING: psql major version 10, server major version 11.
             Some psql features might not work.
    Type "help" for help.

    db=# select * from COLOR;
     id |  name  | red | green | blue
    ----+--------+-----+-------+------
      1 | GREEN  |   0 |   128 |    0
      2 | RED    | 255 |     0 |    0
      3 | BLUE   |   0 |     0 |  255
      4 | WHITE  | 255 |   255 |  255
      5 | YELLOW | 255 |   255 |    0
      6 | LIME   |   0 |   255 |    0
      7 | BLACK  | 255 |   255 |  255
      8 | GRAY   | 128 |   128 |  128
    (8 rows)

    db=#
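The same check can be scripted. psql accepts a libpq connection URI, so the values from the ConfigMap and the NodePort can be combined into a single string. The helper name `pg_uri` is hypothetical, and the sketch assumes that the user name and password contain no characters that need percent-encoding.

```shell
# Hypothetical helper: build a libpq connection URI.
#   $1 = user, $2 = password, $3 = host, $4 = port, $5 = database
# Assumes no value needs percent-encoding.
pg_uri() {
  echo "postgresql://$1:$2@$3:$4/$5"
}

uri=$(pg_uri user pass 192.168.50.11 30100 db)
echo "$uri"

# With the cluster reachable and psql installed, the row count can be read with:
#   psql "$uri" -c 'SELECT count(*) FROM COLOR;'
```

Running the query before and after recreating the deployment gives a quick, non-interactive confirmation that the rows are still there.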

IT Wonder Lab tutorials are based on the diverse experience of Javier Ruiz, who founded and bootstrapped a SaaS company in the energy sector. His company, later acquired by a NASDAQ traded company, managed over €2 billion per year of electricity for prominent energy producers across Europe and America. Javier has over 25 years of experience in building and managing IT companies, developing cloud infrastructure, leading cross-functional teams, and transitioning his own company from on-premises, consulting, and custom software development to a successful SaaS model that scaled globally.

Hi Javier Ruiz,

Thank you very much for your great article, it helps me a lot.

"This configuration is suitable only for the development and testing of applications in a local development Kubernetes cluster...", if I move this architecture to production, is it suitable to use NFS as a storage of database for high traffic read/write performance? How many CCUs can be processed approximately?

Thanks,

Lekcy

Hello Lekcy,

I apologize, but I don't have information on the specific read/write performance of NFS. When dealing with production databases, I tend to opt for mounting data disks using iSCSI, or using RDS in AWS.

In terms of Kubernetes, I lean towards not deploying stateful applications within it. While it's technically feasible using PersistentVolumes, I find that for most scenarios involving databases, factors like replication, backups, and potentially load balancing are critical. In my view, the infrastructure for databases requires a high level of protection. On the other hand, containerized applications are often transient and easily portable. Combining large databases and stateless applications within the same Kubernetes cluster can complicate system upgrades, recreation, and management.

Best regards,

Javier
