Kubernetes (a.k.a. K8s) is the leading platform for container deployment and management.

In this tutorial, we create a Kubernetes cluster made up of one master server (API, Scheduler, Controller) and n nodes (Pods, kubelet, proxy, and containerd, which replaces Docker), running Project Calico to implement the Kubernetes networking model.

This tutorial is complex and requires constant updates to work with recent Kubernetes releases. For a quick and simple Kubernetes cluster for local development, check the tutorial on Installing a local Kubernetes cluster using K3s a Lightweight Kubernetes.

Vagrant is used to spin up the virtual machines using the VirtualBox hypervisor and to run the Ansible playbooks that configure the Kubernetes cluster environment.

The objective is to provision development environments and learning clusters with many workers.

This Ansible playbook and Vagrantfile for installing Kubernetes were made possible with the help of other blogs (https://kubernetes.io/blog and https://docs.projectcalico.org/). I have included links to the relevant pages in the source code.

After installing your local Kubernetes cluster, add Istio for load balancing external or internal traffic, controlling failures, retries, and routing, applying limits, monitoring network traffic between services, or adding secure communication to your microservices architecture. See the tutorial Installing Istio in Kubernetes with Ansible and Vagrant for local development.

Updates:

hosts: k8s-m-* and hosts: k8s-n-*)

containerd instead of Docker (Kubernetes is deprecating Docker as a container runtime after v1.20).

The Ansible playbook follows IT Wonder Lab best practices and can be used to configure a new Kubernetes cluster in a cloud provider or on a different hypervisor, as it has no dependencies on VirtualBox or Vagrant.

Click on the play button to see the execution of Vagrant creating a Kubernetes Cluster with Ansible in less than 3 minutes (the screencast shows the configuration of Kubernetes before 1.22 using Docker).

    lsb_release -a
    wget https://releases.hashicorp.com/vagrant/2.2.16/vagrant_2.2.16_x86_64.deb
    sudo apt install ./vagrant_2.2.16_x86_64.deb
    sudo apt install virtualbox
    sudo apt-add-repository --yes --update ppa:ansible/ansible
    sudo apt install ansible

The code used to create a Kubernetes Cluster with Vagrant and Ansible is composed of the Vagrantfile, the roles/k8s.yml playbook, and the Ansible roles k8s/master, k8s/node, k8s/common, and add_packages.

If all prerequisites are met, start the cluster with vagrant up. It takes about two minutes to download the boxes, spin up the VirtualBox machines, and install the Kubernetes cluster software.

    cd ~/git/github/ansible-vbox-vagrant-kubernetes
    vagrant up

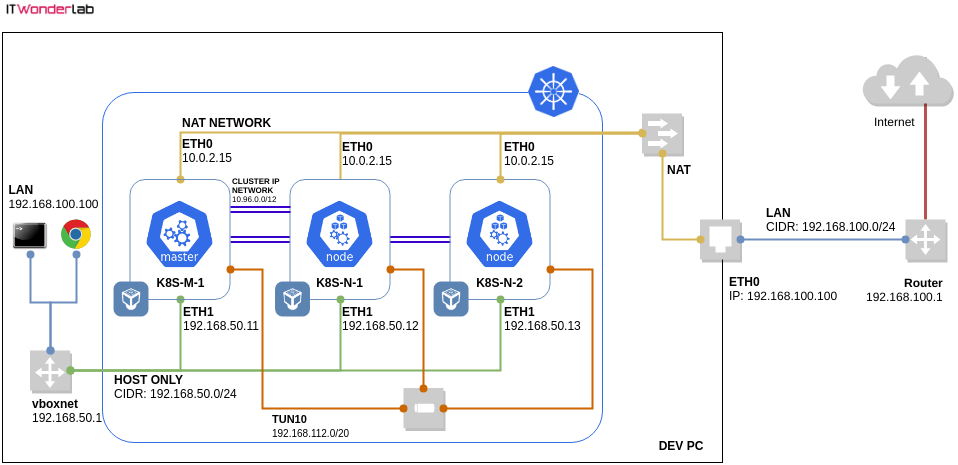

The Kubernetes and VirtualBox network will be composed of at least 3 networks shown in the VirtualBox Kubernetes cluster network diagram:

The VirtualBox HOST ONLY network is used to access the Kubernetes master and nodes from outside the cluster; it can be considered the Kubernetes public network for our development environment. In the diagram, it is shown in green, with connections to each Kubernetes machine and to a VirtualBox virtual interface (vboxnet0).

VirtualBox creates the necessary routes and the vboxnet0 interface:

    $ route
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    default         _gateway        0.0.0.0         UG    100    0        0 enx106530cde22a
    link-local      0.0.0.0         255.255.0.0     U     1000   0        0 enx106530cde22a
    192.168.50.0    0.0.0.0         255.255.255.0   U     0      0        0 vboxnet0
    192.168.100.0   0.0.0.0         255.255.255.0   U     100    0        0 enx106530cde22a

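Applications published using a Kubernetes NodePort will be available at all the IPs assigned to the Kubernetes servers. For example, for an application published at NodePort 30000 and the default Vagrantfile values (one master, two nodes), the following URLs will allow access from outside the Kubernetes cluster:

    http://192.168.50.11:30000/
    http://192.168.50.12:30000/
    http://192.168.50.13:30000/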

See how to Publish an Application Outside the Kubernetes Cluster. Accessing the Kubernetes servers by ssh using those IPs is also possible.

    $ ssh [email protected]
    [email protected]'s password: vagrant
    Welcome to Ubuntu 18.04.1 LTS (GNU/Linux 4.15.0-29-generic x86_64)
    ...
    Last login: Mon Apr 22 16:45:17 2019 from 10.0.2.2

The NAT network interface, with the same IP (10.0.2.15) for all servers, is assigned to the first interface of each VirtualBox machine. It is used to access the external world (LAN and Internet) from inside the Kubernetes cluster. In the diagram, it is shown in yellow, with connections to each Kubernetes machine and a NAT router that connects to the LAN and the Internet. For example, it is used during the Kubernetes cluster configuration to download the needed packages. Since it is a NAT interface, it doesn’t allow inbound connections by default.

The internal connections between Kubernetes Pods use a tunnel network with IPs in the CIDR range 192.168.112.0/20 (as configured by our Ansible playbook). In the diagram, it is shown in orange, with connections to each Kubernetes machine using tunnel interfaces. Kubernetes will assign IPs from the Pod network to each Pod that it creates. Pod IPs are not accessible from outside the Kubernetes cluster and will change when Pods are destroyed and created.

The Kubernetes Cluster Network is a private IP range used inside the cluster to give each Kubernetes service a dedicated IP. In the diagram, it is shown in purple. As shown in the following example, a different CLUSTER-IP is assigned to each service:

    $ kubectl get all
    NAME                                   READY   STATUS    RESTARTS   AGE
    pod/nginx-deployment-d7b95894f-2hpjk   1/1     Running   0          5m47s
    pod/nginx-deployment-d7b95894f-49lrh   1/1     Running   0          5m47s
    pod/nginx-deployment-d7b95894f-wl497   1/1     Running   0          5m47s

    NAME                       TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)          AGE
    service/kubernetes         ClusterIP   10.96.0.1     <none>        443/TCP          137m
    service/nginx-service-np   NodePort    10.103.75.9   <none>        8082:30000/TCP   5m47s

    NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/nginx-deployment   3/3     3            3           5m47s

    NAME                                         DESIRED   CURRENT   READY   AGE
    replicaset.apps/nginx-deployment-d7b95894f   3         3         3       5m47s

Cluster IPs can’t be accessed from outside the Kubernetes cluster; therefore, a NodePort (or a LoadBalancer in a cloud provider) is created to publish an app. A NodePort uses the Kubernetes external IPs. See “Publish an Application outside Kubernetes Cluster” for an example of exposing an app in Kubernetes.

    IMAGE_NAME = "bento/ubuntu-20.04"
    K8S_NAME = "ditwl-k8s-01"
    MASTERS_NUM = 1
    MASTERS_CPU = 2
    MASTERS_MEM = 2048
    NODES_NUM = 2
    NODES_CPU = 2
    NODES_MEM = 2048
    IP_BASE = "192.168.50."
    VAGRANT_DISABLE_VBOXSYMLINKCREATE=1

    Vagrant.configure("2") do |config|
      config.ssh.insert_key = false

      (1..MASTERS_NUM).each do |i|
        config.vm.define "k8s-m-#{i}" do |master|
          master.vm.box = IMAGE_NAME
          master.vm.network "private_network", ip: "#{IP_BASE}#{i + 10}"
          master.vm.hostname = "k8s-m-#{i}"
          master.vm.provider "virtualbox" do |v|
            v.memory = MASTERS_MEM
            v.cpus = MASTERS_CPU
          end
          master.vm.provision "ansible" do |ansible|
            ansible.playbook = "roles/k8s.yml"
            #Redefine defaults
            ansible.extra_vars = {
              k8s_cluster_name: K8S_NAME,
              k8s_master_admin_user: "vagrant",
              k8s_master_admin_group: "vagrant",
              k8s_master_apiserver_advertise_address: "#{IP_BASE}#{i + 10}",
              k8s_master_node_name: "k8s-m-#{i}",
              k8s_node_public_ip: "#{IP_BASE}#{i + 10}"
            }
          end
        end
      end

      (1..NODES_NUM).each do |j|
        config.vm.define "k8s-n-#{j}" do |node|
          node.vm.box = IMAGE_NAME
          node.vm.network "private_network", ip: "#{IP_BASE}#{j + 10 + MASTERS_NUM}"
          node.vm.hostname = "k8s-n-#{j}"
          node.vm.provider "virtualbox" do |v|
            v.memory = NODES_MEM
            v.cpus = NODES_CPU
          end
          node.vm.provision "ansible" do |ansible|
            ansible.playbook = "roles/k8s.yml"
            #Redefine defaults
            ansible.extra_vars = {
              k8s_cluster_name: K8S_NAME,
              k8s_node_admin_user: "vagrant",
              k8s_node_admin_group: "vagrant",
              k8s_node_public_ip: "#{IP_BASE}#{j + 10 + MASTERS_NUM}"
            }
          end
        end
      end
    end

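The constants at the top of the Vagrantfile define the configuration properties of the Kubernetes cluster: the base box image, the cluster name, the number of masters and nodes with their CPU and memory, and the prefix of the host-only network (IP_BASE).

Masters are created in the first loop, (1..MASTERS_NUM).each, which creates MASTERS_NUM master machines with the following characteristics: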

The name of the machine is created with the expression “k8s-m-#{i}” that identifies a machine as a member of Kubernetes (k8s), a master, and the number (the loop variable i).

MASTERS_MEM and MASTERS_CPU variables are used to set the amount of memory and CPU assigned to the master nodes.

Ansible is the provisioner, using the playbook “roles/k8s.yml”.

The pattern for the hostname will be used by Ansible to select all master nodes with the expression "k8s-m-*", as listed in the file .vagrant/provisioners/ansible/inventory/vagrant_ansible_inventory

Contents of vagrant_ansible_inventory:

    # Generated by Vagrant

    k8s-n-2 ansible_host=127.0.0.1 ansible_port=2201 ansible_user='vagrant' ansible_ssh_private_key_file='/home/jruiz/.vagrant.d/insecure_private_key'
    k8s-m-1 ansible_host=127.0.0.1 ansible_port=2222 ansible_user='vagrant' ansible_ssh_private_key_file='/home/jruiz/.vagrant.d/insecure_private_key'
    k8s-n-1 ansible_host=127.0.0.1 ansible_port=2200 ansible_user='vagrant' ansible_ssh_private_key_file='/home/jruiz/.vagrant.d/insecure_private_key'

Ansible extra vars are defined to modify the default values assigned by the Ansible playbooks.

Worker nodes are created in the second loop, (1..NODES_NUM).each, using code similar to that used for the masters. The name of each worker node is created with the expression “k8s-n-#{j}”, which identifies a machine as a member of Kubernetes (k8s), a node, and its number (the loop variable j).
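The naming and addressing arithmetic of the two Vagrantfile loops can be checked outside Vagrant. The following is a minimal sketch in plain Ruby and is not part of the repository: the helper names `cluster_machines` and `nodeport_endpoints` are hypothetical, and the arguments are the values set at the top of the Vagrantfile.

```ruby
# Hypothetical helper: reproduces the names and host-only IPs that the
# Vagrantfile loops assign (masters first, then worker nodes).
def cluster_machines(ip_base, masters_num, nodes_num)
  machines = {}
  (1..masters_num).each do |i|
    machines["k8s-m-#{i}"] = "#{ip_base}#{i + 10}"
  end
  (1..nodes_num).each do |j|
    machines["k8s-n-#{j}"] = "#{ip_base}#{j + 10 + masters_num}"
  end
  machines
end

# Hypothetical helper: a NodePort is reachable on every machine IP.
def nodeport_endpoints(machines, port)
  machines.values.map { |ip| "#{ip}:#{port}" }
end

machines = cluster_machines("192.168.50.", 1, 2)
machines.each { |name, ip| puts "#{name} -> #{ip}" }
puts nodeport_endpoints(machines, 30000).join(", ")
```

Because worker IPs are offset by MASTERS_NUM, raising the number of masters shifts the worker addresses, so any address written down elsewhere (for example in a browser bookmark) would need to be recomputed.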

The playbook is executed by the Vagrant Ansible provisioner. It selects hosts using a wildcard (k8s-m-* and k8s-n-*) and applies the master and node roles respectively.

    - hosts: k8s-m-*
      become: yes
      roles:
        - { role: k8s/master}

    - hosts: k8s-n-*
      become: yes
      roles:
        - { role: k8s/node}
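To see which machines each play would target, the wildcard selection can be approximated with shell-style globbing. This is only an illustrative sketch: it uses Ruby's `File.fnmatch?`, not Ansible's own pattern matcher, and `select_hosts` is a hypothetical helper.

```ruby
# Hostnames created by the Vagrantfile for 1 master and 2 nodes.
hosts = ["k8s-m-1", "k8s-n-1", "k8s-n-2"]

# Hypothetical helper: keep the hosts whose name matches a glob pattern.
def select_hosts(hosts, pattern)
  hosts.select { |name| File.fnmatch?(pattern, name) }
end

puts "k8s/master role: #{select_hosts(hosts, 'k8s-m-*').join(', ')}"
puts "k8s/node role:   #{select_hosts(hosts, 'k8s-n-*').join(', ')}"
```

This is why the hostname pattern set in the Vagrantfile matters: a machine whose name matched neither pattern would not receive any role.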

The k8s/master Ansible playbook is a role that creates the Kubernetes master node. It uses the following configuration, which can be redefined in the Vagrantfile (see Vagrantfile Ansible extra vars).

    k8s_master_admin_user: "ubuntu"
    k8s_master_admin_group: "ubuntu"
    k8s_master_node_name: "k8s-m"
    k8s_cluster_name: "k8s-cluster"
    k8s_master_apiserver_advertise_address: "192.168.101.100"
    k8s_master_pod_network_cidr: "192.168.112.0/20"

Since this is a small Kubernetes cluster the default 192.168.0.0/16 pod network has been changed to 192.168.112.0/20 (a smaller network 192.168.112.0 – 192.168.127.255).

It can be modified in the Ansible master defaults file or in the Vagrantfile as an extra var.
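When choosing a different pod network, it helps to confirm the address range a CIDR block covers and that it does not collide with the host-only network. A minimal sketch using Ruby's standard `ipaddr` library follows; `cidr_summary` is a hypothetical helper, not something defined by the playbooks.

```ruby
require "ipaddr"

# Hypothetical helper: first address, last address, and size of a CIDR block.
def cidr_summary(cidr)
  range = IPAddr.new(cidr).to_range
  first = range.first
  last  = range.last
  { first: first.to_s, last: last.to_s, size: last.to_i - first.to_i + 1 }
end

pod_net = cidr_summary("192.168.112.0/20")
puts "#{pod_net[:first]} - #{pod_net[:last]} (#{pod_net[:size]} addresses)"

# The host-only address of the master must stay outside the pod network.
pod_block = IPAddr.new("192.168.112.0/20")
puts "master IP inside pod network? #{pod_block.include?(IPAddr.new('192.168.50.11'))}"
```

A /20 block leaves 12 host bits, which is far fewer addresses than the default /16 but still ample for a development cluster.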

The role requires the installation of some packages that are common to master and worker Kubernetes nodes. Since there should be an Ansible role for each task, the Ansible meta folder is used to list role dependencies and pass the values of the variables (each role has its own variables, which are assigned at this step).

The master Kubernetes Ansible role depends on k8s/common:

    dependencies:
      - { role: k8s/common,
          k8s_common_admin_user: "{{k8s_master_admin_user}}",
          k8s_common_admin_group: "{{k8s_master_admin_group}}"
        }

Once the dependencies are met, the playbook for the master role is executed:

    #https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/configure-cgroup-driver/
    #https://kubernetes.io/docs/reference/config-api/kubeadm-config.v1beta3/
    - name: Configuring the kubelet cgroup driver
      template:
        src: kubeadm-config.yaml
        dest: /home/{{ k8s_master_admin_user }}/kubeadm-config.yaml

    #https://docs.projectcalico.org/v3.6/getting-started/kubernetes/
    #kubeadm init --config /home/vagrant/kubeadm-config.yaml
    # --cri-socket /run/containerd/containerd.sock
    #--apiserver-advertise-address=192.168.50.11 --apiserver-cert-extra-sans=192.168.50.11 --node-name=k8s-m-1 --pod-network-cidr=192.168.112.0/20
    - name: Configure kubectl
      command: kubeadm init --config /home/{{ k8s_master_admin_user }}/kubeadm-config.yaml
      # --apiserver-advertise-address="{{ k8s_master_apiserver_advertise_address }}" --apiserver-cert-extra-sans="{{ k8s_master_apiserver_advertise_address }}" --node-name="{{ k8s_master_node_name }}" --pod-network-cidr="{{ k8s_master_pod_network_cidr }}"
      args:
        creates: /etc/kubernetes/manifests/kube-apiserver.yaml

    - name: Create .kube dir for {{ k8s_master_admin_user }} user
      file:
        path: "/home/{{ k8s_master_admin_user }}/.kube"
        state: directory

    - name: Copy kube config to {{ k8s_master_admin_user }} home .kube dir
      copy:
        src: /etc/kubernetes/admin.conf
        dest: /home/{{ k8s_master_admin_user }}/.kube/config
        remote_src: yes
        owner: "{{ k8s_master_admin_user }}"
        group: "{{ k8s_master_admin_group }}"
        mode: 0660

    #Rewrite calico replacing defaults
    #https://docs.projectcalico.org/getting-started/kubernetes/self-managed-onprem/onpremises
    - name: Rewrite calico.yaml
      template:
        src: calico/3.15/calico.yaml
        dest: /home/{{ k8s_master_admin_user }}/calico.yaml

    - name: Install Calico (using Kubernetes API datastore)
      become: false
      command: kubectl apply -f /home/{{ k8s_master_admin_user }}/calico.yaml

    # Step 2.6 from https://kubernetes.io/blog/2019/03/15/kubernetes-setup-using-ansible-and-vagrant/
    - name: Generate join command
      command: kubeadm token create --print-join-command
      register: join_command

    - name: Copy join command for {{ k8s_cluster_name }} cluster to local file
      become: false
      local_action: copy content="{{ join_command.stdout_lines[0] }}" dest="./{{ k8s_cluster_name }}-join-command"

Each worker node needs to be added to the cluster by executing the join command that was generated on the master node.

The join command runs kubeadm join with the API server endpoint (located on the Kubernetes master server), a token, and a hash used to validate the root CA public key.

kubeadm join 192.168.50.11:6443 --token lmnbkq.80h4j8ez0vfktytw --discovery-token-ca-cert-hash sha256:54bbeb6b1a519700ae1f2e53c6f420vd8d4fe2d47ab4dbd7ce1a7f62c457f68a1
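The three parts of the join command can be pulled apart to make its structure explicit. The sketch below is illustrative only: `parse_join_command` and `bootstrap_token?` are hypothetical helpers, the parser assumes every option is written as a `--flag value` pair, and the sample line is the one shown above, copied verbatim.

```ruby
require "shellwords"

# Hypothetical helper: split a kubeadm join line into endpoint, token, hash.
# Assumes options appear as "--flag value" pairs after the endpoint.
def parse_join_command(line)
  words = Shellwords.split(line)
  unless words[0, 2] == %w[kubeadm join]
    raise ArgumentError, "not a kubeadm join command"
  end

  parsed = { endpoint: words[2] }
  words.drop(3).each_slice(2) do |flag, value|
    case flag
    when "--token" then parsed[:token] = value
    when "--discovery-token-ca-cert-hash" then parsed[:ca_cert_hash] = value
    end
  end
  parsed
end

# Bootstrap tokens have the form "<6 chars>.<16 chars>", lowercase alphanumeric.
def bootstrap_token?(token)
  token.match?(/\A[a-z0-9]{6}\.[a-z0-9]{16}\z/)
end

join_line = "kubeadm join 192.168.50.11:6443 --token lmnbkq.80h4j8ez0vfktytw " \
            "--discovery-token-ca-cert-hash sha256:54bbeb6b1a519700ae1f2e53c6f420vd8d4fe2d47ab4dbd7ce1a7f62c457f68a1"
join = parse_join_command(join_line)
puts "endpoint: #{join[:endpoint]}"
puts "token well formed: #{bootstrap_token?(join[:token])}"
```

The endpoint is the master's host-only IP plus the API server port, which is why the master must be provisioned before any worker tries to join.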

The playbook to install a node is very small. It has a dependency on the k8s/common role:

    dependencies:
      - { role: k8s/common,
          k8s_common_admin_user: "{{k8s_node_admin_user}}",
          k8s_common_admin_group: "{{k8s_node_admin_group}}"
        }

Specific worker node tasks:

    - name: Copy the join command to {{ k8s_cluster_name }} cluster
      copy:
        src: "./{{ k8s_cluster_name }}-join-command"
        dest: /home/{{ k8s_node_admin_user }}/{{ k8s_cluster_name }}-join-command
        owner: "{{ k8s_node_admin_user }}"
        group: "{{ k8s_node_admin_group }}"
        mode: 0760

    - name: Join the node to cluster {{ k8s_cluster_name }}
      command: sh /home/{{ k8s_node_admin_user }}/{{ k8s_cluster_name }}-join-command

The k8s/common Ansible role is used by the Kubernetes master and worker nodes Ansible playbooks.

Using the Ansible meta folder, the add_packages role is added as a dependency. The add_packages role installs the packages listed in the Ansible variable k8s_common_add_packages_names, along with their corresponding repositories and public keys.

    dependencies:
      - { role: add_packages,
          linux_add_packages_repositories: "{{ k8s_common_add_packages_repositories }}",
          linux_add_packages_keys: "{{ k8s_common_add_packages_keys }}",
          linux_add_packages_names: "{{ k8s_common_add_packages_names }}",
          linux_remove_packages_names: "{{ k8s_common_remove_packages_names }}"
        }

Definition of variables:

    k8s_common_add_packages_keys:
      - key: https://download.docker.com/linux/ubuntu/gpg
      - key: https://packages.cloud.google.com/apt/doc/apt-key.gpg

    k8s_common_add_packages_repositories:
      - repo: "deb [arch=amd64] https://download.docker.com/linux/ubuntu {{ansible_distribution_release}} stable"
      - repo: "deb https://apt.kubernetes.io/ kubernetes-xenial main" #k8s not available for Bionic (Ubuntu 18.04)

    k8s_common_add_packages_names:
      - name: apt-transport-https
      - name: curl
      - name: containerd.io
      - name: kubeadm
      - name: kubelet
      - name: kubectl

    k8s_common_remove_packages_names:
      - name:

    k8s_common_modprobe:
      - name: overlay
      - name: br_netfilter

    k8s_common_sysctl:
      - name: net.bridge.bridge-nf-call-iptables
        value: 1
      - name: net.ipv4.ip_forward
        value: 1
      - name: net.bridge.bridge-nf-call-ip6tables
        value: 1

    k8s_common_admin_user: "ubuntu"
    k8s_common_admin_group: "ubuntu"

The Ansible playbook for k8s/common installs the Kubernetes components common to all nodes (master and workers):

    - name: Remove current swaps from fstab
      lineinfile:
        dest: /etc/fstab
        regexp: '^/[\S]+\s+none\s+swap '
        state: absent

    - name: Disable swap
      command: swapoff -a
      when: ansible_swaptotal_mb > 0

    - name: Configure containerd.conf modules
      template:
        src: etc/modules-load.d/containerd.conf
        dest: /etc/modules-load.d/containerd.conf

    - name: Load containerd kernel modules
      modprobe:
        name: "{{ item.name }}"
        state: present
      loop: "{{ k8s_common_modprobe }}"

    - name: Configure kubernetes-cri sys params
      sysctl:
        name: "{{ item.name }}"
        value: "{{ item.value }}"
        state: present
        reload: yes
      loop: "{{ k8s_common_sysctl }}"

    # https://github.com/containerd/containerd/issues/4581
    # File etc/containerd/config.toml needs to be deleted before kubeadm init
    - name: Configure containerd.conf
      template:
        src: etc/containerd/config.toml
        dest: /etc/containerd/config.toml
      notify: restart containerd

    - name: Configure node-ip {{ k8s_node_public_ip }} at kubelet
      lineinfile:
        path: '/etc/systemd/system/kubelet.service.d/10-kubeadm.conf'
        line: 'Environment="KUBELET_EXTRA_ARGS=--node-ip={{ k8s_node_public_ip }}"'
        regexp: 'KUBELET_EXTRA_ARGS='
        insertafter: '\[Service\]'
        state: present
      notify: restart kubelet

    - name: restart containerd
      service:
        name: containerd
        state: restarted
        daemon_reload: yes

    - name: Delete configuration for containerd.conf (see https://github.com/containerd/containerd/issues/4581)
      file:
        state: absent
        path: /etc/containerd/config.toml
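It is worth checking which /etc/fstab entries the swap-removal pattern of the first task actually matches. The sketch below applies the same pattern with Ruby's regular expression engine (Ansible evaluates it with Python's, which should behave the same way for this simple pattern); the fstab lines, including the placeholder UUID, are hypothetical samples.

```ruby
# Same pattern as the lineinfile task: a path starting with "/",
# then "none", then "swap" followed by a space.
def swap_entry?(line)
  line.match?(%r{^/[\S]+\s+none\s+swap })
end

# Hypothetical fstab lines used only for illustration.
fstab_lines = [
  "/swapfile none swap sw 0 0",
  "/dev/sda2 none swap sw 0 0",
  "UUID=hypothetical-uuid none swap sw 0 0",
  "/dev/sda1 / ext4 errors=remount-ro 0 1",
]

fstab_lines.each do |line|
  action = swap_entry?(line) ? "remove" : "keep"
  puts "#{action.ljust(6)} #{line}"
end
```

Under these assumptions, a swap entry identified by UUID rather than by a path would not be matched, so swap could come back after a reboot on boxes that declare it that way.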

The add_packages Ansible role specializes in the installation and removal of packages.

Steps:

    ---
    - name: Add new repositories keys
      apt_key:
        url='{{item.key}}'
      with_items: "{{ linux_add_packages_keys | default([])}}"
      when: linux_add_packages_keys is defined and not (linux_add_packages_keys is none or linux_add_packages_keys | trim == '')
      register: aptnewkeys

    - name: Add new repositories to sources
      apt_repository:
        repo='{{item.repo}}'
      with_items: "{{ linux_add_packages_repositories | default([])}}"
      when: linux_add_packages_repositories is defined and not (linux_add_packages_repositories is none or linux_add_packages_repositories | trim == '')

    - name: Force update cache if new keys added
      set_fact:
        linux_add_packages_cache_valid_time: 0
      when: aptnewkeys.changed

    - name: Remove packages
      apt:
        name={{ item.name }}
        state=absent
      with_items: "{{ linux_remove_packages_names | default([])}}"
      when: linux_remove_packages_names is defined and not (linux_remove_packages_names is none or linux_remove_packages_names | trim == '')

    - name: Install packages
      apt:
        name={{ item.name }}
        state=present
        update_cache=yes
        cache_valid_time={{linux_add_packages_cache_valid_time}}
      with_items: "{{ linux_add_packages_names | default([])}}"
      when: linux_add_packages_names is defined and not (linux_add_packages_names is none or linux_add_packages_names | trim == '')


IT Wonder Lab tutorials are based on the diverse experience of Javier Ruiz, who founded and bootstrapped a SaaS company in the energy sector. His company, later acquired by a NASDAQ traded company, managed over €2 billion per year of electricity for prominent energy producers across Europe and America. Javier has over 25 years of experience in building and managing IT companies, developing cloud infrastructure, leading cross-functional teams, and transitioning his own company from on-premises, consulting, and custom software development to a successful SaaS model that scaled globally.

Unfortunately I also get an error and I'm not sure why containerd can't be started on the VM:

TASK [k8s/common : restart containerd] *****************************************

fatal: [k8s-m-1]: FAILED! => {"changed": false, "msg": "Unable to restart service containerd: Job for containerd.service failed because a timeout was exceeded.\nSee \"systemctl status containerd.service\" and \"journalctl -xe\" for details.\n"}

hi i have a question should i use windows as a host?

Hi Asim malik

I haven't tried but it should be possible. A reader already made a PR about Windows. Please check it.

https://github.com/itwonderlab/ansible-vbox-vagrant-kubernetes/pull/4

Best regards,

Javier

Thanks, very illustrative and useful! On arch linux, possibly other, the default allowed range for vbox host networks is 192.168.56.0/24. The script will tell you, and this link https://forums.virtualbox.org/viewtopic.php?t=104357 indicates the default ranges.

To make the excellent example run through vagrant up, I configured

/etc/vbox/networks.conf: to contain

* 192.168.50.0/24

Using the hints as provided with the already provided scripts.

Hi,

Thank you very much for the tutorial 🙂

I have Windows OS as host machine and I did some works so that Vagrantfile works perfectly:

1- Enable WSL in Windows:

From "Turn Windows Features on or off" >> Enable "Windows Subsystem for Linux" >> "Ok" >> reboot

2- install Ubuntu:

From "Microsoft Store" >> Search For "Ubuntu" >> Download and install

3- Run Ubuntu and install "Vagrant" and "Ansible" as standard Linux

4- additional configurations regard running vagrant in WSL machine:

echo 'export VAGRANT_WSL_ENABLE_WINDOWS_ACCESS="1"' >> ~/.bashrc

echo 'export PATH="$PATH:/mnt/c/Program Files/Oracle/VirtualBox"' >> ~/.bashrc

echo 'export PATH="$PATH:/mnt/c/Windows/System32"' >> ~/.bashrc

echo 'export PATH="$PATH:/mnt/c/Windows/System32/WindowsPowerShell/v1.0"' >> ~/.bashrc

5- Clone the repo in one of the mounted directories like "/mnt/c" or "/mnt/d" so that no synced folder error is raised

6- Change IP_BASE Value in Vagrantfile to "192.168.56."

7- Run vagrant up and everything go smoothly.

8- Error related to K8s cluster:

8-1- disable swap permanently in /etc/fstab

8-2-Error when run kubectl ((I1226 06:04:59.430679 15846 cached_discovery.go:87] failed to write cache to /home/vagrant/.kube/cache/discovery/192.168.56.11_6443/scheduling.k8s.io/v1/serverresources.json due to mkdir /home/vagrant/.kube/cache: permission denied)))

To solve it:

sudo mkdir ~/.kube/cache

sudo chmod 755 -R ~/.kube/cache

sudo chown vagrant:vagrant ~/.kube/cache

Hopefully that help windows users

thank you

Thanks for the wonderful tutorial. Helping me set up lab for my project and learn k8 without wasting any time for installation

Hi Javier,

Thank you so much for posting such a wonderful article. Being new to Kubernetes, I was not sure how to approach. But this article made my day.

Thanks a lot - Roy

Running into the same Error as Mark with "Kube init".

Using Vagrant Version 2.2.18

Thanks Martin

I will check the tutorial and fix any issues.

Hi Martin:

It was announced that Kubernetes was deprecating Docker as a container runtime after v1.20.

The tutorial has been updated to Kubernetes v1.22.0 and it now uses containerd.io instead of Docker.

Source code has been uploaded to GitHub and tutorial "instructions" will be updated accordingly.

Please check the new code and let me know if you find any issues.

Thanks,

Javier

Hey Javier,

its working out of the box now again.

Thanks a lot.

Hi Mark

Thanks, the updates on 10 June, 2021 are for: Vagrant, Ansible, VirtualBox. No changes in code were needed.

I can't test it now but will do it in a few days to see if something is broken.

Thanks

Javier

Hey Thanks for this.

I used this a couple of months ago , everything worked fine. Today, I git cloned again and I'm getting errors with kubeadm is unable to initialize cluster. See below snippet..

I noticed that in your updates you mention 10 June, 2021 that you updated dependencies. Is it possible something is broken since that change. I cant see which files you updated in git - did you merge the updates?

I will leave it with you for now.

Cheers

---- snippet -----

TASK [k8s/master : Configure kubectl] ******************************************

fatal: [k8s-m-1]: FAILED! => {"changed": true, "cmd": ["kubeadm", "init", "--apiserver-advertise-address=192.168.55.11", "--apiserver-cert-extra-sans=192.168.55.11", "--node-name=k8s-m-1", "--pod-network-cidr=192.168.112.0/20"], "delta": "0:02:52.130093", "end": "2021-08-05 00:32: ....

Hi Mark:

It was announced that Kubernetes was deprecating Docker as a container runtime after v1.20.

The tutorial has been updated to Kubernetes v1.22.0 and it now uses containerd.io instead of Docker.

Source code has been uploaded to GitHub and tutorial "instructions" will be updated accordingly.

Please check the new code and let me know if you find any issues.

Thanks,

Javier

Thanks a lot, fine and useful description !

Perfect. Very well done.

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

scp -r [email protected]:~/.kube ~/

alias kc=kubectl

kc get nodes

kc get all

And now you've modified your hypervisor into a bastion node as well.

Used it and it worked like a charm, had no issues whatsoever, I always use this for my local setup for K8s. Thanks!

I stumbled on this while having a coffee with friends (my bad) and could not wait to try it out on my laptop. I tried it out in the evening and it went without a hitch. It took a bit more than three minutes but when it finished and I did a quick check, this was exactly what I had been trying to set up. Today I will have a detailed look at the scripts but right now your post deserves a rating of five *.

Thank you very much.

Worked as advertised! Ok took me maybe an hour, but I was reading, stopping, understanding, and tried it. I'm a developer and this could easily be handed over to other devs to replicate for local build/test/deploy.

Found this article on doing 'hello world' in python for the 'next stop' on okay what do you do with a k8 deployment. https://kubernetes.io/blog/2019/07/23/get-started-with-kubernetes-using-python/

But I have an error when I try to deploy my 'hello world' test, ie

kubectl get all reports

pod/hello-python-5477d55974-4bdwh 0/1 ErrImageNeverPull 0 12m

I think this is simply something with the deployment.yaml of my test, (ie I'm not sure how to specify in the yaml how k8 is to look at my local docker repo, vrs the one its running in vagrant). Or maybe you tell k8-m-1 where to find the local docker repo??

PS THANK-YOU FOR POSTING THIS AWESOME ARTICLE!

Hi Brian,

Thanks for your words.

The Docker repo is accessed using http (not a local file). You need to push the image to a remote public repo.

See my tutorial Istio traffic splitting in Kubernetes for instructions on how to build an image, publish it in a remote repo and configure Kubernetes deployment to use the remote repo.

HI, great work!

is available a git repo for the source ??

i'd try to use ansible_local instead of ansible for using vagrant on Windows and install ansible on guest.

thks

Thanks Antonio

The source code is at https://github.com/ITWonderLab/ansible-vbox-vagrant-kubernetes

How to install kubernetes with a specific version such as 1.5?

Hi, thank you for the great tutorial - I am however a bit unsure of which prerequisites (I need Vagrant, Virtualbox, and what else? - and and which versions of them?) I will need to install on my local Ubuntu 18.04 host before going through with this tutorial? Thanks in advance!

Hi MNGA,

Thanks for pointing this out. I have added a short Prerequisites section with needed packages, releases and the URL for installation instructions.

https://www.itwonderlab.com/ansible-kubernetes-vagrant-tutorial/#Prerequisites

I am using Ubuntu 19.04 but you can use Ubuntu 18.04 without a problem.

Thanks a lot, and kudos for the fast reply. That was just what I was looking for - I'll go right ahead and try it out.

Worked like a charm - thanks again 🙂

Very descriptive and useful. I will definitely use it in my future projects. Thanks!